AI Agent Observability Explained: Key Concepts and Standards

AI agent observability has become a critical discipline for organizations deploying autonomous AI systems at scale. This guide explores the emerging standards and best practices for monitoring, analyzing, and improving AI agent performance in enterprise environments.

What Is an AI Agent?

An AI agent is an application that uses a combination of Large Language Model (LLM) capabilities, tools to connect to the external world, and high-level reasoning to achieve specific goals autonomously. For more details, see Google's "What is an AI agent?" and IBM's "What are AI agents?". Alternatively, agents can be defined as systems in which LLMs dynamically direct their own processes and tool usage, maintaining control over how they accomplish tasks.

AI agents differ from simple LLM applications by their ability to:

- Make complex decisions based on context and feedback

- Use tools and APIs to interact with external systems

- Plan multi-step sequences to achieve goals

- Maintain state and memory across interactions

- Self-correct and adapt their approach when facing obstacles
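To make these capabilities concrete, here is a toy agent loop. It is a sketch under stated assumptions, not a real framework. `fake_llm` is a hypothetical stand-in for an LLM call, and `search` is a hypothetical tool. The loop shows where decisions, tool calls, memory, and self-correction happen, because those are the points that observability later has to capture.

```python
from typing import Callable, Dict, List, Optional, Tuple

def search(query: str) -> str:
    """Hypothetical tool standing in for an external API call."""
    return f"results for {query!r}"

# Tool registry the agent can choose from by name.
TOOLS: Dict[str, Callable[[str], str]] = {"search": search}

def fake_llm(goal: str, memory: List[str]) -> Tuple[str, str]:
    """Hypothetical stand-in for an LLM: pick (tool_name, argument).

    It first asks for a tool that does not exist. Once the resulting error
    appears in memory, it switches tools, which mimics self-correction.
    """
    if any(entry.startswith("error") for entry in memory):
        return "search", goal
    return "web_lookup", goal

def run_agent(goal: str, max_steps: int = 3) -> Tuple[Optional[str], List[str]]:
    memory: List[str] = []  # state carried across steps
    for _ in range(max_steps):  # multi-step loop with a hard upper bound
        tool_name, arg = fake_llm(goal, memory)  # context-dependent decision
        tool = TOOLS.get(tool_name)
        if tool is None:
            # Record the failure so the next decision can take it into account.
            memory.append(f"error: unknown tool {tool_name}")
            continue
        result = tool(arg)  # interaction with the "external world"
        memory.append(f"ok: {tool_name}")
        return result, memory
    return None, memory

result, memory = run_agent("weather in Paris")
print(result)  # results for 'weather in Paris'
print(memory)  # ['error: unknown tool web_lookup', 'ok: search']
```

Even in this tiny loop, the final answer alone hides the failed first attempt. Only the recorded intermediate steps reveal it.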

Understanding AI Agents and Their Observability Needs

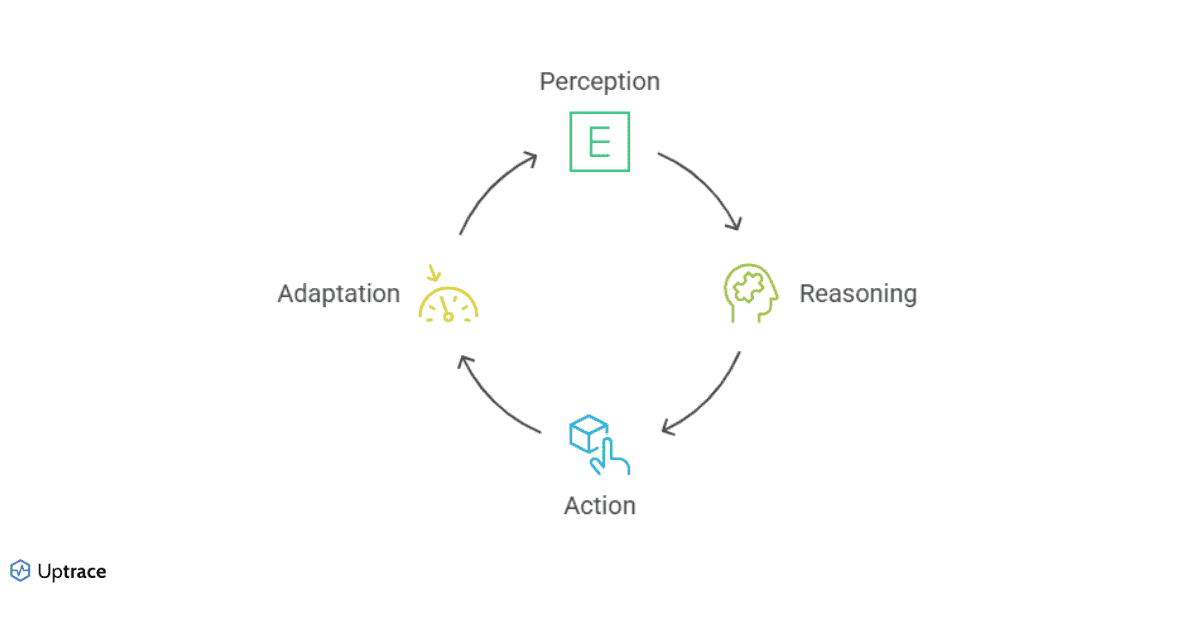

AI agents combine LLM capabilities with external tools and reasoning mechanisms to autonomously achieve specific goals. Unlike traditional software systems, AI agents make decisions that can vary based on context, input variations, and inherent randomness in their outputs.

A typical AI agent architecture includes LLMs as reasoning engines, external tools for real-world interaction, planning mechanisms, and memory components. This complexity creates unique observability requirements. Organizations need visibility not just into performance metrics, but also into decision paths and reasoning processes.
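Nested spans are one way to get visibility into decision paths. Each agent invocation gets one root span, with a child span for planning and one for each tool call. The sketch below uses a tiny hypothetical `ToyTracer` built on the standard library so that it runs anywhere; in practice you would use an OpenTelemetry tracer. The span names and attribute keys here (`plan`, `tool`, `goal`) are illustrative assumptions, not standardized names.

```python
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Any, Dict, Iterator, List, Optional

@dataclass
class Span:
    name: str
    span_id: str
    parent_id: Optional[str]
    attributes: Dict[str, Any] = field(default_factory=dict)
    start: float = 0.0
    end: float = 0.0

class ToyTracer:
    """In-memory tracer for illustration only; not the OpenTelemetry SDK."""

    def __init__(self) -> None:
        self.spans: List[Span] = []   # finished spans, in the order they ended
        self._stack: List[Span] = []  # currently open spans (innermost last)

    @contextmanager
    def span(self, name: str, **attributes: Any) -> Iterator[Span]:
        parent_id = self._stack[-1].span_id if self._stack else None
        s = Span(name, uuid.uuid4().hex[:16], parent_id, dict(attributes), time.perf_counter())
        self._stack.append(s)
        try:
            yield s
        finally:
            s.end = time.perf_counter()
            self._stack.pop()
            self.spans.append(s)

def decision_path(spans: List[Span], root_name: str = "invoke_agent") -> List[str]:
    """Reconstruct the ordered steps directly under the agent's root span."""
    root = next(s for s in spans if s.name == root_name)
    children = sorted((s for s in spans if s.parent_id == root.span_id), key=lambda s: s.start)
    return [s.attributes.get("tool", s.name) for s in children]

tracer = ToyTracer()
with tracer.span("invoke_agent", goal="book a flight"):
    with tracer.span("plan") as plan:
        plan.attributes["steps"] = ["search_flights", "book"]  # record the reasoning output
    with tracer.span("execute_tool", tool="search_flights") as step:
        step.attributes["result_count"] = 3
    with tracer.span("execute_tool", tool="book") as step:
        step.attributes["status"] = "ok"

print(decision_path(tracer.spans))  # ['plan', 'search_flights', 'book']
```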

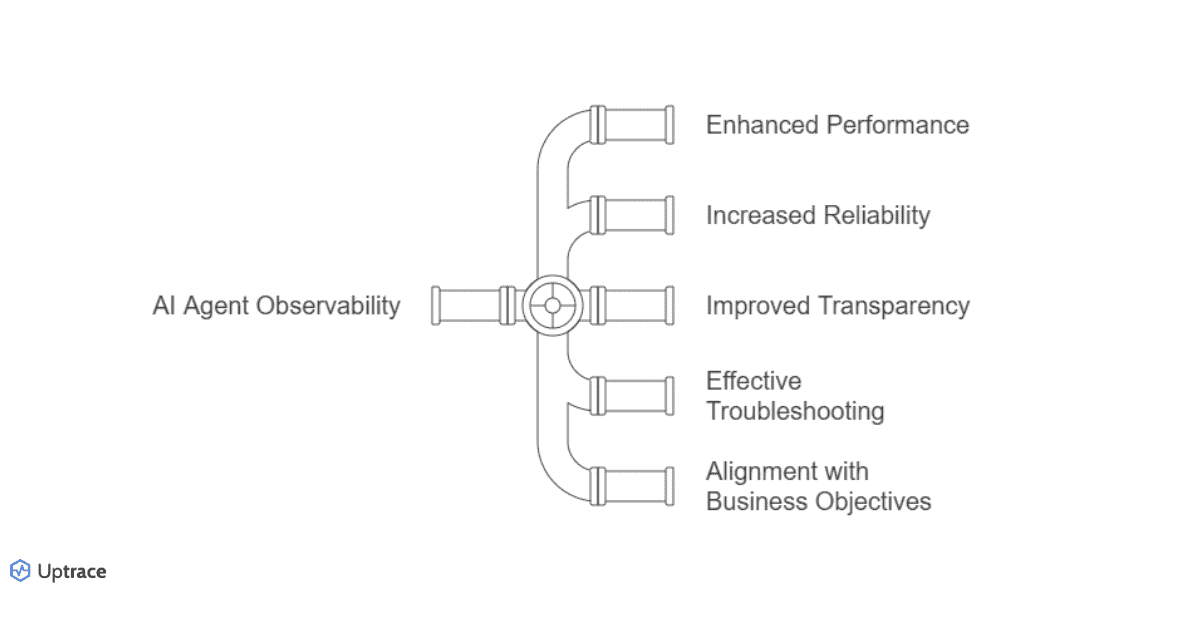

Observability serves two critical functions for AI agents. First, it enables operational monitoring—detecting performance issues, errors, and bottlenecks. Second, it creates a feedback loop for quality improvement, where telemetry data helps enhance agent capabilities over time.
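Both functions can draw on the same telemetry. The sketch below assumes that spans have already been exported as flat records; the field names and sample values are made up for illustration. From these records it derives operational signals (error rate, p95 latency, errors per tool). It also builds a review queue of failed traces, which could feed evaluation sets or prompt fixes.

```python
import math
from collections import Counter
from typing import Any, Dict, List

# Hypothetical flattened telemetry records exported from a tracing backend.
runs: List[Dict[str, Any]] = [
    {"trace_id": "t1", "latency_ms": 820, "error": None, "tool": "search"},
    {"trace_id": "t2", "latency_ms": 4300, "error": "timeout", "tool": "search"},
    {"trace_id": "t3", "latency_ms": 950, "error": None, "tool": "calculator"},
    {"trace_id": "t4", "latency_ms": 1200, "error": "bad_args", "tool": "calculator"},
]

def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

def summarize(records: List[Dict[str, Any]]) -> Dict[str, Any]:
    errors = [r for r in records if r["error"]]
    return {
        # Operational monitoring: is the agent healthy right now?
        "error_rate": len(errors) / len(records),
        "p95_latency_ms": percentile([r["latency_ms"] for r in records], 95),
        "errors_by_tool": Counter(r["tool"] for r in errors),
        # Quality feedback loop: traces worth reviewing or turning into test cases.
        "review_queue": [r["trace_id"] for r in errors],
    }

print(summarize(runs))
```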

Without robust observability solutions, organizations struggle to troubleshoot complex AI workflows, scale reliably, improve efficiency, or maintain the transparency necessary for stakeholder trust.

The Current Fragmented Landscape

As of 2025, the AI agent observability landscape remains fragmented despite significant progress. Some frameworks offer built-in instrumentation, while others rely on integration with external tools. This disjointed approach creates several challenges:

- The lack of consistent telemetry formats makes it difficult to compare agents across different frameworks

- Vendor lock-in becomes a risk when telemetry is tied to proprietary formats

- Integration complexity increases costs and technical debt

- Perhaps most importantly, organizations cannot easily benchmark performance across different implementations

Distinguishing Applications from Frameworks

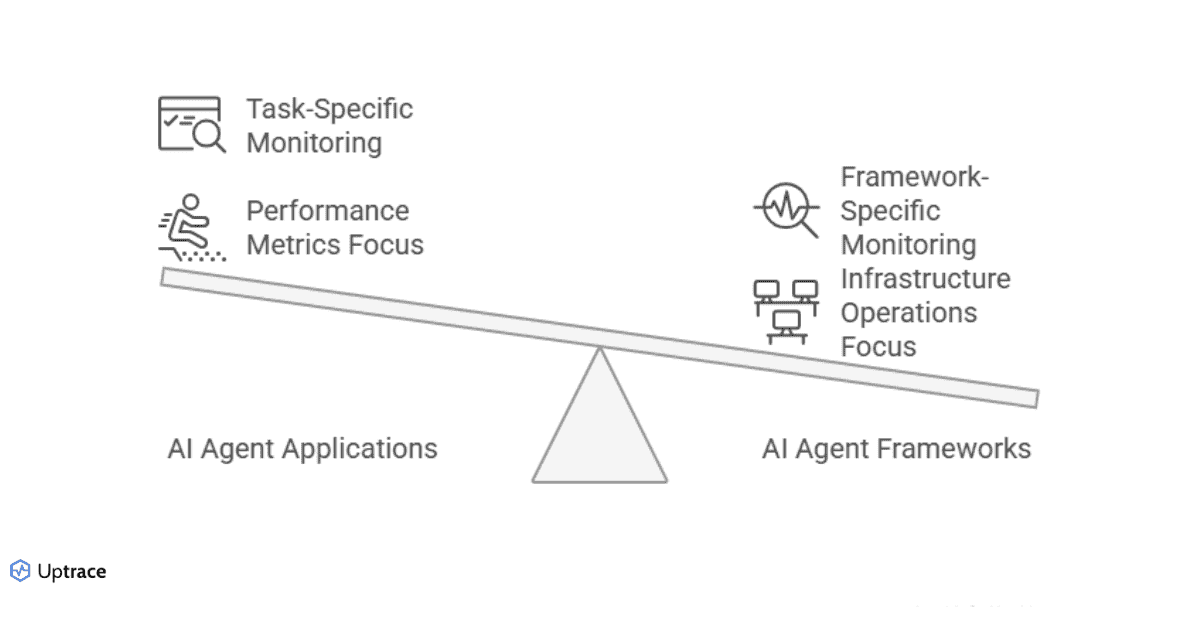

When implementing observability, it's important to distinguish between AI agent applications and frameworks:

- AI agent applications are individual autonomous entities performing specific tasks. Their observability needs focus on performance, accuracy, and resource usage.

- AI agent frameworks provide the infrastructure to develop and deploy agents (examples include IBM BeeAI, CrewAI, AutoGen, and LangGraph). Framework observability must capture not just application metrics but also framework-specific operations.

OpenTelemetry's Standardization Efforts

The GenAI observability project within OpenTelemetry is addressing fragmentation by developing semantic conventions for AI agent telemetry. Its work focuses on two key areas:

- Agent Application Semantic Convention, based on Google's AI agent white paper, establishes foundational standards for performance metrics, trace formats, and logging. The goal is consistent monitoring across different agent implementations.

- Agent Framework Semantic Convention aims to standardize reporting across frameworks while allowing for vendor-specific extensions. This balance between standardization and flexibility is crucial for ecosystem growth.
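The sketch below shows the kind of thing these conventions standardize. It builds span names and attributes for an agent invocation and a tool call, using attribute keys from the OpenTelemetry GenAI semantic conventions (`gen_ai.operation.name`, `gen_ai.agent.name`, `gen_ai.tool.name`, `gen_ai.usage.input_tokens`, and so on). These conventions were still marked as in development at the time of writing, so the exact keys may change. The helper functions and the `myframework.*` extension key are hypothetical. They illustrate one way to keep vendor-specific attributes out of the shared `gen_ai.*` namespace.

```python
from typing import Any, Dict, Optional, Tuple

Attributes = Dict[str, Any]

def agent_span(agent_name: str, agent_id: str, model: str,
               input_tokens: int, output_tokens: int) -> Tuple[str, Attributes]:
    """Span name and attributes for one agent invocation (hypothetical helper)."""
    attrs: Attributes = {
        "gen_ai.operation.name": "invoke_agent",
        "gen_ai.agent.name": agent_name,
        "gen_ai.agent.id": agent_id,
        "gen_ai.request.model": model,
        "gen_ai.usage.input_tokens": input_tokens,
        "gen_ai.usage.output_tokens": output_tokens,
    }
    return f"invoke_agent {agent_name}", attrs

def tool_span(tool_name: str, call_id: str,
              extensions: Optional[Attributes] = None) -> Tuple[str, Attributes]:
    """Span name and attributes for one tool execution (hypothetical helper)."""
    attrs: Attributes = {
        "gen_ai.operation.name": "execute_tool",
        "gen_ai.tool.name": tool_name,
        "gen_ai.tool.call.id": call_id,
    }
    for key, value in (extensions or {}).items():
        # Framework-specific data lives in its own namespace, never under gen_ai.*
        if key.startswith("gen_ai."):
            raise ValueError(f"extension key uses reserved namespace: {key}")
        attrs[key] = value
    return f"execute_tool {tool_name}", attrs

name, attrs = tool_span("search", "call_1", {"myframework.retry_count": 0})
print(name)  # execute_tool search
```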

Implementation Approaches for Framework Developers

Framework developers have two primary options for implementing observability:

Baked-in Instrumentation

Some frameworks, such as CrewAI, incorporate built-in observability based on OpenTelemetry conventions. This approach simplifies adoption for users unfamiliar with observability tooling and ensures that new framework features are instrumented as soon as they ship. However, it can add bloat to the framework and limit customization options for advanced users.

Framework developers choosing this path should make telemetry configurable, plan for compatibility with external instrumentation, and consider listing their framework in the OpenTelemetry registry.
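Here is a minimal sketch of configurable baked-in telemetry. `Telemetry`, `run_task`, and the `MYFRAMEWORK_TELEMETRY` environment variable are hypothetical names. The framework always calls a hook, and the hook defaults to a no-op context. An environment variable can switch the hook off, and `set_factory` lets users or an external instrumentation package supply their own span implementation. In a real framework the hook would typically be an OpenTelemetry tracer obtained from `opentelemetry.trace.get_tracer(...)`, which already behaves as a no-op until an SDK is configured.

```python
import os
from contextlib import contextmanager, nullcontext
from typing import Any, Callable, ContextManager, Dict, Iterator, List, Optional, Tuple

# A span factory takes (name, attributes) and returns a context manager.
SpanFactory = Callable[[str, Dict[str, Any]], ContextManager[Any]]

class Telemetry:
    """Baked-in but configurable instrumentation hook (hypothetical)."""

    def __init__(self, factory: Optional[SpanFactory] = None) -> None:
        # Users can opt out at startup via an environment variable.
        disabled = os.environ.get("MYFRAMEWORK_TELEMETRY", "on").lower() == "off"
        self._factory = None if disabled else factory

    def set_factory(self, factory: Optional[SpanFactory]) -> None:
        """Explicitly install (or remove, with None) the span implementation."""
        self._factory = factory

    def span(self, name: str, **attributes: Any) -> ContextManager[Any]:
        if self._factory is None:
            return nullcontext()  # cheap default when nothing is configured
        return self._factory(name, attributes)

telemetry = Telemetry()

def run_task(task: str) -> str:
    """Framework code: always instrumented, whether or not anyone listens."""
    with telemetry.span("myframework.task", task=task):
        return task.upper()

# Usage: plug in a recording factory, as a user or external package might.
recorded: List[Tuple[str, Dict[str, Any]]] = []

@contextmanager
def recording_factory(name: str, attributes: Dict[str, Any]) -> Iterator[None]:
    recorded.append((name, attributes))
    yield

telemetry.set_factory(recording_factory)
print(run_task("summarize"))  # SUMMARIZE
print(recorded)  # [('myframework.task', {'task': 'summarize'})]
```

Because the hook is replaceable, external instrumentation can take over without the framework emitting duplicate spans.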

External OpenTelemetry Instrumentation

The alternative approach uses separate instrumentation libraries that can be imported and configured as needed. This can be implemented either through dedicated repositories (like Traceloop and Langtrace) or through OpenTelemetry's own repositories.

This approach reduces framework bloat and leverages community maintenance, but risks fragmentation through incompatible packages. Developers should ensure compatibility with popular libraries, provide clear documentation, and align with existing standards.
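The external approach can be sketched as an instrumentor that wraps a framework method from the outside. `Agent` stands in for a hypothetical third-party class, and spans go into a plain list rather than through the OpenTelemetry SDK. The `instrument()`/`uninstrument()` pair mirrors the entry points exposed by OpenTelemetry Python instrumentation libraries. Making both methods idempotent prevents double wrapping, which is one small part of staying compatible with other instrumentation packages.

```python
import functools
import time
from typing import Any, Callable, Dict, List, Optional

class Agent:
    """Stand-in for a class from a third-party agent framework (hypothetical)."""
    def run(self, prompt: str) -> str:
        return f"answer to {prompt}"

class AgentInstrumentor:
    """Patches Agent.run without modifying the framework's source."""

    def __init__(self) -> None:
        self.spans: List[Dict[str, Any]] = []
        self._original: Optional[Callable[..., str]] = None

    def instrument(self) -> None:
        if self._original is not None:
            return  # already instrumented: never wrap twice
        original = Agent.run
        spans = self.spans

        @functools.wraps(original)
        def wrapped(agent_self: Agent, prompt: str) -> str:
            start = time.perf_counter()
            error: Optional[str] = None
            try:
                return original(agent_self, prompt)
            except Exception as exc:
                error = type(exc).__name__
                raise
            finally:
                # "error.type" follows the general OpenTelemetry attribute name.
                spans.append({"name": "invoke_agent",
                              "duration_s": time.perf_counter() - start,
                              "error.type": error})

        self._original = original
        Agent.run = wrapped  # type: ignore[method-assign]

    def uninstrument(self) -> None:
        if self._original is not None:
            Agent.run = self._original  # type: ignore[method-assign]
            self._original = None

inst = AgentInstrumentor()
inst.instrument()
inst.instrument()      # second call is a no-op
Agent().run("hi")      # recorded
inst.uninstrument()
Agent().run("hi")      # not recorded
print(len(inst.spans))  # 1
```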

Implementing AI Agent Observability with Uptrace

Uptrace provides a comprehensive solution for monitoring AI agents using OpenTelemetry standards. The platform offers several key capabilities essential for effective agent observability:

- Standardized data collection supports OpenTelemetry's semantic conventions for AI agents, ensuring consistent telemetry regardless of your framework choice

- End-to-end tracing visualizes the complete execution path from initial prompt to final action, helping identify bottlenecks and errors

- LLM-specific metrics, such as token usage and latency, help optimize performance and control costs

- Intelligent alerting for unusual behavior patterns and performance degradation helps catch issues before they impact users

- Custom dashboards unify metrics, traces, and logs for a complete view of agent performance, simplifying troubleshooting and optimization
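The following plain-Python sketch is not Uptrace's API. It only illustrates the kind of aggregation that token-usage metrics and alert rules perform over span attributes. It sums estimated cost per model from `gen_ai.usage.*` attributes. It also flags traces with an unusually high number of tool calls, a common symptom of an agent stuck in a loop. The prices, model names, sample spans, and the threshold of 10 tool calls are all placeholders, not recommendations.

```python
from collections import defaultdict
from typing import Any, Dict, List, Tuple

# Placeholder prices per 1,000 tokens as (input, output); use your provider's real rates.
PRICE_PER_1K: Dict[str, Tuple[float, float]] = {"model-a": (0.5, 1.5), "model-b": (3.0, 15.0)}

Span = Dict[str, Any]

def cost_by_model(spans: List[Span]) -> Dict[str, float]:
    """Estimated spend per model, from spans carrying token-usage attributes."""
    totals: Dict[str, float] = defaultdict(float)
    for s in spans:
        model = s.get("gen_ai.request.model")
        if model is None:
            continue  # not an LLM call
        in_price, out_price = PRICE_PER_1K[model]
        totals[model] += (s["gen_ai.usage.input_tokens"] * in_price
                          + s["gen_ai.usage.output_tokens"] * out_price) / 1000
    return dict(totals)

def runaway_traces(spans: List[Span], max_tool_calls: int = 10) -> List[str]:
    """Trace IDs whose tool-call count exceeds the alert threshold."""
    calls: Dict[str, int] = defaultdict(int)
    for s in spans:
        if s.get("gen_ai.operation.name") == "execute_tool":
            calls[s["trace_id"]] += 1
    return sorted(t for t, n in calls.items() if n > max_tool_calls)

tool_call = {"gen_ai.operation.name": "execute_tool", "gen_ai.tool.name": "search"}
spans: List[Span] = [
    {"trace_id": "t1", "gen_ai.operation.name": "chat", "gen_ai.request.model": "model-a",
     "gen_ai.usage.input_tokens": 1200, "gen_ai.usage.output_tokens": 300},
    {"trace_id": "t1", **tool_call},
    {"trace_id": "t2", "gen_ai.operation.name": "chat", "gen_ai.request.model": "model-b",
     "gen_ai.usage.input_tokens": 2000, "gen_ai.usage.output_tokens": 1000},
] + [{"trace_id": "t2", **tool_call} for _ in range(12)]

print(cost_by_model(spans))   # {'model-a': 1.05, 'model-b': 21.0}
print(runaway_traces(spans))  # ['t2']
```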

Future Developments in AI Agent Observability

The field continues to evolve rapidly in several directions. We anticipate more comprehensive semantic conventions that address edge cases in agent behavior. Unified framework standards will improve interoperability, while tighter integration with AI model observability will provide deeper insights.

Advanced tooling for monitoring and debugging will make troubleshooting more intuitive, and standardized performance metrics will enable better comparisons across implementations.

Key Takeaways

✓ AI agent observability provides essential visibility into autonomous AI systems through standardized monitoring approaches.

✓ Two critical standards are emerging: Agent Application Semantic Convention and Agent Framework Semantic Convention.

✓ Framework developers can choose between baked-in instrumentation and external OpenTelemetry instrumentation.

✓ Uptrace integrates AI agent observability with broader system monitoring for comprehensive visibility.

✓ The future of AI agent observability lies in standardization, integration, and advanced tooling.

FAQ

- What is the difference between AI agent monitoring and traditional application monitoring?

AI agent monitoring requires visibility into decision paths, reasoning processes, and tool usage that traditional APM solutions don't cover. The non-deterministic nature of AI agents also necessitates specialized observability approaches.

- Which SNMP version is recommended for AI agent observability?

SNMP is not typically used for AI agent observability. Instead, OpenTelemetry has emerged as the standard for collecting telemetry from AI agents due to its flexibility and rich semantics.

- How does AI agent observability impact agent performance?

When properly implemented, observability should have minimal impact on performance. However, excessive instrumentation can introduce latency, especially in high-throughput scenarios. It's important to balance observability needs with performance requirements.

- Can AI agent observability work in air-gapped environments?

Yes, AI agent observability solutions can be deployed in air-gapped environments by installing all components locally. This includes the OpenTelemetry Collector, Uptrace, and any necessary instrumentation libraries.

- How do I choose between baked-in and external instrumentation for my AI agent framework?

Consider your users' technical expertise, deployment scenarios, and customization needs. Baked-in instrumentation works best for frameworks targeting users with limited observability experience, while external instrumentation offers more flexibility for advanced users.
