OpenTelemetry for AI Systems: Implementation Guide

AI systems, from machine learning models to Large Language Models (LLMs) and autonomous AI agents, introduce unique observability challenges. Their non-deterministic nature, complex dependencies, and specialized performance characteristics require thoughtful instrumentation approaches. OpenTelemetry has emerged as the leading standard for implementing observability across these systems.

This guide provides practical implementation steps for adding OpenTelemetry instrumentation to AI systems, with a focus on LLMs and AI agents.

The Challenge of AI System Observability

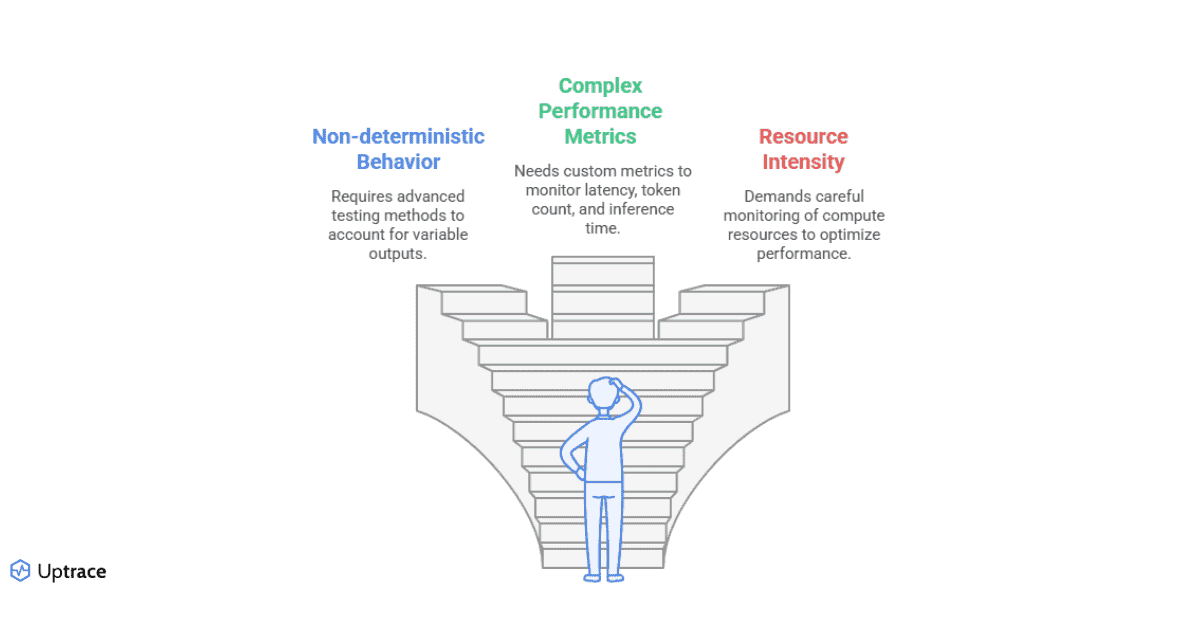

AI systems differ from traditional applications in several important ways that impact observability:

- Non-deterministic behavior: The same input can produce different outputs, making deterministic testing insufficient

- Complex performance characteristics: Metrics like latency, token count, and inference time are critical but non-standard

- Resource intensity: AI operations are typically resource-intensive, requiring careful monitoring of compute resources

- Chain-of-thought visibility: Understanding reasoning paths and decision processes is essential for complex AI systems

- Input/output correlation: Maintaining relationships between inputs and outputs is necessary for analyzing model behavior

OpenTelemetry's flexible, vendor-neutral approach makes it ideal for addressing these challenges while avoiding lock-in to specific monitoring platforms.

OpenTelemetry Components for AI Systems

Let's understand how OpenTelemetry's core components apply to AI system observability:

Telemetry Types

OpenTelemetry collects three types of telemetry data, each serving different purposes in AI system monitoring:

- Metrics: Quantitative measurements like request rate, token usage, latency, and hardware utilization

- Traces: Request flows showing the path through system components, including model inference steps

- Logs: Event records containing detailed information about specific occurrences

See the OpenTelemetry architecture documentation for more details.

For AI systems, these telemetry types are complemented by AI-specific data like prompt templates, token counts, and model configurations.

Semantic Conventions

OpenTelemetry defines standard naming conventions for telemetry data. For AI systems, the GenAI Special Interest Group has developed specific conventions covering:

- LLM operations and metrics

- Vector database interactions

- AI agent activities and decision processes

- Model training and inference

These conventions ensure consistency in how AI-related telemetry is collected and structured.

Context Propagation

Context propagation is particularly important for AI systems where requests may flow through multiple components:

- From user interfaces to AI backends

- Through prompt engineering and retrieval components

- Between different LLMs in a chain

- From LLMs to external tools in agent workflows

OpenTelemetry's context propagation allows these relationships to be captured and preserved.
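To make the mechanism concrete, here is a minimal, standard-library-only sketch of the W3C `traceparent` header, the default wire format OpenTelemetry uses to carry trace context between services. In a real application the OpenTelemetry propagators build and parse this header for you; the helper names `build_traceparent` and `parse_traceparent` and the retrieval-to-LLM flow are hypothetical and exist only for illustration.

```python
import re
import secrets

# W3C Trace Context header: version-traceid-parentid-flags, all lowercase hex
_TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def build_traceparent(trace_id: int, span_id: int, sampled: bool = True) -> str:
    """Format a trace ID and span ID as a version-00 traceparent header value."""
    flags = 1 if sampled else 0
    return f"00-{trace_id:032x}-{span_id:016x}-{flags:02x}"


def parse_traceparent(header: str):
    """Return (trace_id, parent_span_id, sampled), or None if the header is invalid."""
    match = _TRACEPARENT_RE.match(header.strip())
    if match is None:
        return None
    trace_id = int(match.group(1), 16)
    span_id = int(match.group(2), 16)
    if trace_id == 0 or span_id == 0:  # all-zero IDs are invalid
        return None
    sampled = bool(int(match.group(3), 16) & 0x01)
    return trace_id, span_id, sampled


# Hypothetical flow: a retrieval component calls the LLM service over HTTP
trace_id = secrets.randbits(128) or 1
retrieval_span_id = secrets.randbits(64) or 1
outgoing_headers = {"traceparent": build_traceparent(trace_id, retrieval_span_id)}

# The LLM service reads the header and continues the same trace
incoming = parse_traceparent(outgoing_headers["traceparent"])
print(incoming == (trace_id, retrieval_span_id, True))
```

Because every hop forwards the same trace ID and replaces only the parent span ID, the backend can stitch the UI request, retrieval step, LLM call, and tool calls into a single trace.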

Instrumenting LLM Applications

Let's start with implementing OpenTelemetry in LLM applications, which form the foundation of most AI systems.

Python Implementation

Python is the most common language for LLM applications. Here's how to implement OpenTelemetry in Python LLM code:

import time

from openai import AsyncOpenAI
from opentelemetry import trace
from opentelemetry.exporter.otlp.proto.grpc.trace_exporter import OTLPSpanExporter
from opentelemetry.sdk.resources import SERVICE_NAME, Resource
from opentelemetry.sdk.trace import TracerProvider
from opentelemetry.sdk.trace.export import BatchSpanProcessor
from opentelemetry.semconv.ai import SpanAttributes
from opentelemetry.trace import Status, StatusCode

# Configure the tracer
resource = Resource(attributes={SERVICE_NAME: "llm-service"})
tracer_provider = TracerProvider(resource=resource)
otlp_exporter = OTLPSpanExporter(endpoint="otel-collector:4317", insecure=True)
span_processor = BatchSpanProcessor(otlp_exporter)
tracer_provider.add_span_processor(span_processor)
trace.set_tracer_provider(tracer_provider)
tracer = trace.get_tracer(__name__)

# OpenAI client used below (reads OPENAI_API_KEY from the environment)
client = AsyncOpenAI()

# Instrument LLM call
# Note: the SpanAttributes constant names are the ones used in this guide;
# check them against the version of the semantic-conventions package you install.
async def generate_text(prompt, model="gpt-4", temperature=0.7, max_tokens=500):
    with tracer.start_as_current_span("llm.generate") as span:
        # Add AI-specific attributes to the span
        span.set_attribute(SpanAttributes.LLM_MODEL_NAME, model)
        span.set_attribute(SpanAttributes.LLM_PROMPT, prompt)
        span.set_attribute(SpanAttributes.LLM_TEMPERATURE, temperature)
        span.set_attribute(SpanAttributes.LLM_MAX_TOKENS, max_tokens)

        start_time = time.time()
        try:
            # Actual LLM call (example with OpenAI)
            response = await client.chat.completions.create(
                model=model,
                messages=[{"role": "user", "content": prompt}],
                temperature=temperature,
                max_tokens=max_tokens
            )

            # Capture response attributes
            completion = response.choices[0].message.content
            span.set_attribute(SpanAttributes.LLM_COMPLETION, completion)
            span.set_attribute(SpanAttributes.LLM_COMPLETION_TOKENS, response.usage.completion_tokens)
            span.set_attribute(SpanAttributes.LLM_PROMPT_TOKENS, response.usage.prompt_tokens)
            span.set_attribute(SpanAttributes.LLM_TOTAL_TOKENS, response.usage.total_tokens)
            return completion
        except Exception as e:
            # Record the failure on the span, then let the caller handle it
            span.record_exception(e)
            span.set_status(Status(StatusCode.ERROR, str(e)))
            raise
        finally:
            # Latency is recorded for both successful and failed calls
            end_time = time.time()
            duration_ms = (end_time - start_time) * 1000
            span.set_attribute(SpanAttributes.LLM_LATENCY, duration_ms)

This code demonstrates how to wrap an LLM API call with OpenTelemetry tracing, capturing important attributes about the request and response.

Instrumenting LLM Frameworks

Many developers use frameworks like LangChain or LlamaIndex. These frameworks can be instrumented using specialized OpenTelemetry libraries:

# Installing LangChain instrumentation (shell command)
pip install opentelemetry-instrumentation-langchain

# Using the instrumentation
from opentelemetry.instrumentation.langchain import LangChainInstrumentor

# Initialize the instrumentor
LangChainInstrumentor().instrument()

# Now all LangChain operations will be automatically traced
from langchain.llms import OpenAI
from langchain.chains import LLMChain
from langchain.prompts import PromptTemplate

llm = OpenAI(temperature=0.9)
prompt = PromptTemplate(
    input_variables=["product"],
    template="What is a good name for a company that makes {product}?",
)
chain = LLMChain(llm=llm, prompt=prompt)

# This will be automatically traced
result = chain.run("colorful socks")

Similar instrumentations exist for other popular frameworks like LlamaIndex and Haystack.

Instrumenting AI Agents

AI agents add another layer of complexity, as they involve decision processes, tool usage, and multi-step reasoning. Here's how to implement OpenTelemetry for AI agents:

from opentelemetry import trace
from opentelemetry.semconv.ai import SpanAttributes
from opentelemetry.trace.status import Status, StatusCode

tracer = trace.get_tracer(__name__)

# _generate_plan, _process_tool_result, and _synthesize_results are
# agent-specific methods that are not shown here.
class InstrumentedAgent:
    def __init__(self, name, tools):
        self.name = name
        self.tools = tools

    async def run(self, task):
        with tracer.start_as_current_span("agent.run") as span:
            span.set_attribute(SpanAttributes.AGENT_NAME, self.name)
            span.set_attribute(SpanAttributes.AGENT_TASK, task)

            try:
                # Plan generation
                with tracer.start_as_current_span("agent.plan") as plan_span:
                    plan = await self._generate_plan(task)
                    plan_span.set_attribute(SpanAttributes.AGENT_PLAN, str(plan))

                # Execute steps
                result = await self._execute_plan(plan, span)
                span.set_attribute(SpanAttributes.AGENT_RESULT, str(result))
                return result
            except Exception as e:
                span.record_exception(e)
                span.set_status(Status(StatusCode.ERROR, str(e)))
                raise

    async def _execute_plan(self, plan, parent_span):
        results = []

        for i, step in enumerate(plan):
            with tracer.start_as_current_span(f"agent.step.{i}") as step_span:
                step_span.set_attribute(SpanAttributes.AGENT_STEP_NUMBER, i)
                step_span.set_attribute(SpanAttributes.AGENT_STEP_DESCRIPTION, step["description"])

                if step["tool"] in self.tools:
                    # Tool execution tracking
                    with tracer.start_as_current_span("agent.tool_execution") as tool_span:
                        tool_span.set_attribute(SpanAttributes.AGENT_TOOL_NAME, step["tool"])
                        tool_span.set_attribute(SpanAttributes.AGENT_TOOL_INPUT, str(step["input"]))
                        tool_result = await self.tools[step["tool"]](step["input"])
                        tool_span.set_attribute(SpanAttributes.AGENT_TOOL_OUTPUT, str(tool_result))

                    step_result = await self._process_tool_result(step, tool_result)
                else:
                    step_result = {"error": f"Tool {step['tool']} not found"}
                    step_span.set_status(Status(StatusCode.ERROR, f"Tool {step['tool']} not found"))

                step_span.set_attribute(SpanAttributes.AGENT_STEP_RESULT, str(step_result))
                results.append(step_result)

        # Final synthesis of results
        with tracer.start_as_current_span("agent.synthesize") as synth_span:
            final_result = await self._synthesize_results(results)
            synth_span.set_attribute(SpanAttributes.AGENT_SYNTHESIS, str(final_result))

        return final_result

This example shows how to instrument different phases of agent execution, including planning, step execution, tool usage, and result synthesis.

Collecting AI-Specific Metrics

Beyond tracing, metrics collection is crucial for AI systems. Here's how to implement metrics for AI workloads:

Step 1: Configure OpenTelemetry Metrics

import time

from opentelemetry import metrics
from opentelemetry.exporter.otlp.proto.grpc.metric_exporter import OTLPMetricExporter
from opentelemetry.sdk.metrics import MeterProvider
from opentelemetry.sdk.metrics.export import PeriodicExportingMetricReader

# Configure metrics
reader = PeriodicExportingMetricReader(
    OTLPMetricExporter(endpoint="otel-collector:4317", insecure=True),
    export_interval_millis=10000
)
meter_provider = MeterProvider(metric_readers=[reader])
metrics.set_meter_provider(meter_provider)
meter = metrics.get_meter(__name__)

Step 2: Define AI-Specific Metrics

# Create AI-specific counters, histograms, and gauges
token_counter = meter.create_counter(
    name="llm.tokens.count",
    description="Number of tokens processed by the LLM",
    unit="tokens"
)

prompt_size_histogram = meter.create_histogram(
    name="llm.prompt.size",
    description="Size distribution of prompts sent to the LLM",
    unit="tokens"
)

latency_histogram = meter.create_histogram(
    name="llm.response.latency",
    description="Latency distribution of LLM responses",
    unit="ms"
)

# Synchronous gauges require a recent OpenTelemetry Python release
inference_memory = meter.create_gauge(
    name="llm.inference.memory",
    description="Memory usage during LLM inference",
    unit="bytes"
)

Step 3: Implement Metrics Collection in Your Application

# Example implementation for an LLM service
# count_tokens() and get_process_memory() are application-specific helpers,
# and client is an async OpenAI-style client; none of them are defined here.
async def process_llm_request(prompt, model):
    # Record prompt size
    prompt_tokens = count_tokens(prompt)
    prompt_size_histogram.record(prompt_tokens, {"model": model})

    # Start timing
    start_time = time.time()

    # Make API call
    try:
        response = await client.completions.create(
            model=model,
            prompt=prompt,
            max_tokens=500
        )

        # Record token metrics
        token_counter.add(
            response.usage.total_tokens,
            {"model": model, "type": "total"}
        )

        return response
    finally:
        # Always record latency
        duration_ms = (time.time() - start_time) * 1000
        latency_histogram.record(duration_ms, {"model": model})

        # Record memory usage
        memory_used = get_process_memory()
        inference_memory.set(memory_used, {"model": model})

These metrics provide insights into token usage, latency, and resource consumption—critical aspects of AI system performance.

Configuring the OpenTelemetry Collector

The OpenTelemetry Collector processes and routes telemetry data. For AI systems, here's a recommended configuration:

receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317
      http:
        endpoint: 0.0.0.0:4318

processors:
  batch:
    timeout: 1s
    send_batch_size: 1024
  memory_limiter:
    check_interval: 1s
    limit_mib: 1000
  attributes:
    actions:
      - key: llm.prompt
        action: hash
        hash_salt: ${env:HASH_SALT}
  filter:
    metrics:
      include:
        match_type: regexp
        metric_names:
          - llm\..*
          - agent\..*
        resource_attributes:
          - Key: service.name
            Value: .*-ai-.*

exporters:
  otlp:
    endpoint: uptrace:14317
    tls:
      insecure: true
  logging:
    verbosity: detailed

service:
  pipelines:
    traces:
      receivers: [otlp]
      processors: [memory_limiter, attributes, batch]
      exporters: [otlp, logging]
    metrics:
      receivers: [otlp]
      processors: [memory_limiter, filter, batch]
      exporters: [otlp, logging]

This configuration includes:

- Privacy protection through the attributes processor that hashes sensitive prompt data

- Metrics filtering to focus on AI-specific metrics

- Memory limiting to prevent resource exhaustion from high-volume AI telemetry

- Batch processing for efficiency

- Diagnostic logging during implementation

Implementing with Specific AI Frameworks

Different AI frameworks require specific implementation approaches. Here are some examples:

LangChain

LangChain is one of the most popular frameworks for building LLM applications and agents. Here's how to instrument it:

# Note: confirm that your LangChain version provides this callback handler
from langchain.callbacks import OpenTelemetryCallbackHandler
from langchain.chains import LLMChain
from langchain.llms import OpenAI
from langchain.prompts import PromptTemplate

# Create OpenTelemetry callback
otel_callback = OpenTelemetryCallbackHandler()

# Configure LangChain components
llm = OpenAI(temperature=0.7, callbacks=[otel_callback])
prompt = PromptTemplate(
    input_variables=["topic"],
    template="Write a short paragraph about {topic}",
)
chain = LLMChain(llm=llm, prompt=prompt, callbacks=[otel_callback])

# Run with tracing enabled
result = chain.run(topic="OpenTelemetry")

For more comprehensive tracing, you can also use the OpenTelemetry instrumentation for LangChain:

from opentelemetry.instrumentation.langchain import LangChainInstrumentor

# This will automatically instrument all LangChain components

LangChainInstrumentor().instrument()

AutoGen and CrewAI

For agent frameworks like AutoGen and CrewAI, similar approaches can be used:

# AutoGen example
import autogen
from opentelemetry.instrumentation.autogen import AutoGenInstrumentor

# Instrument AutoGen
AutoGenInstrumentor().instrument()

# Now create and use agents as normal
assistant = autogen.AssistantAgent(
    name="assistant",
    llm_config={"model": "gpt-4"}
)
user_proxy = autogen.UserProxyAgent(
    name="user_proxy"
)
user_proxy.initiate_chat(assistant, message="Solve this math problem: 3x + 5 = 17")

# CrewAI example
from crewai import Agent, Task, Crew
from opentelemetry.instrumentation.crewai import CrewAIInstrumentor

# Instrument CrewAI
CrewAIInstrumentor().instrument()

# Define agents and tasks as normal
# (llm is the language model object configured elsewhere in your application)
researcher = Agent(
    role="Researcher",
    goal="Conduct comprehensive research",
    backstory="You're a skilled researcher",
    llm=llm
)

# ... rest of CrewAI setup

Custom LLM Implementations

For custom LLM implementations, manual instrumentation is required:

import time

from opentelemetry import trace
from opentelemetry.semconv.ai import SpanAttributes

tracer = trace.get_tracer(__name__)

class MyCustomLLM:
    def __init__(self, model_path):
        # Keep the path so it can be attached to spans later
        self.model_path = model_path
        # load_model is a placeholder for your own model-loading function
        self.model = load_model(model_path)

    def generate(self, prompt, max_tokens=100):
        with tracer.start_as_current_span("custom_llm.generate") as span:
            # Add span attributes
            span.set_attribute(SpanAttributes.LLM_PROMPT, prompt)
            span.set_attribute(SpanAttributes.LLM_MAX_TOKENS, max_tokens)
            span.set_attribute("custom.model_path", self.model_path)

            # Generate text
            start_time = time.time()
            result = self.model.generate_text(prompt, max_tokens=max_tokens)
            duration_ms = (time.time() - start_time) * 1000

            # Record results
            span.set_attribute(SpanAttributes.LLM_COMPLETION, result)
            span.set_attribute(SpanAttributes.LLM_LATENCY, duration_ms)

            return result

Setting Up Dashboards and Alerts

Once telemetry is flowing, you'll want to visualize it and set up alerts. Here are some recommended Uptrace dashboard panels for AI systems:

Critical AI Metrics to Monitor

- Token Usage Rates
  - Total tokens per minute
  - Tokens by model type
  - Tokens by application

- Latency Profiles
  - P50, P95, P99 response times
  - Latency distribution by model
  - Trends over time

- Error Rates
  - Rate of model errors (timeouts, context limits)
  - Rate of malformed prompts
  - Correlation of errors with specific inputs

- Resource Utilization
  - GPU memory usage
  - CPU load during inference
  - Cache hit ratios for repeated queries

- AI-Specific KPIs
  - For RAG systems: retrieval quality metrics
  - For agents: task completion rates
  - For classification: accuracy/confidence metrics
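For readers less familiar with the P50/P95/P99 panels listed above, here is a small sketch of how a percentile is derived from raw latency samples using the nearest-rank method. Observability backends usually compute percentiles from histogram buckets instead, so their results are approximations; the `percentile` helper and the sample latencies below are hypothetical and only illustrate the concept.

```python
def percentile(samples, p: int):
    """Nearest-rank percentile: the smallest sample with at least p% of values at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 <= p <= 100:
        raise ValueError("p must be between 0 and 100")
    ordered = sorted(samples)
    # Integer ceiling of p * n / 100, clamped so that p = 0 maps to the minimum
    rank = max(1, (p * len(ordered) + 99) // 100)
    return ordered[rank - 1]


# Made-up LLM response latencies in milliseconds, including one slow outlier
latencies_ms = [120, 95, 310, 150, 2100, 180, 140, 160, 130, 175]

for p in (50, 95, 99):
    print(f"P{p}: {percentile(latencies_ms, p)} ms")
```

A single slow request dominates the tail percentiles while leaving the median untouched, which is why dashboards for LLM latency typically show P95 or P99 next to P50 rather than an average.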

Alert Recommendations

AI systems require specialized alerting. Consider these alert configurations:

# Example alert configurations
alerts:
  - name: High Token Usage Rate
    query: rate(sum(llm.tokens.count{type="total"})[5m]) > 1000
    for: 5m
    severity: warning
    description: Token usage exceeding normal rates, check for inefficient prompts or abuse

  - name: Model Latency Spike
    query: histogram_quantile(0.95, sum(rate(llm.response.latency_bucket[5m])) by (le, model)) > 2000
    for: 2m
    severity: critical
    description: 95th percentile latency exceeding 2 seconds for LLM responses

  - name: High Error Rate
    query: rate(sum(llm.errors.count)[5m]) / rate(sum(llm.requests.count)[5m]) > 0.05
    for: 2m
    severity: critical
    description: Error rate exceeding 5% for LLM requests

  - name: Agent Task Failures
    query: sum(rate(agent.task.completion{status="failed"}[10m])) > 0.1
    for: 5m
    severity: warning
    description: AI agent failing to complete assigned tasks

These alerts catch common issues with AI systems before they impact users, focusing on performance, reliability, and resource usage.

Advanced Implementation Techniques

As your AI system monitoring matures, consider these advanced techniques:

Sampling Strategies for LLM Traffic

High-volume LLM traffic can generate excessive telemetry data. Implement intelligent sampling:

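from opentelemetry import trace
from opentelemetry.sdk.trace import TracerProvider
from opentelemetry.sdk.trace.sampling import (
    Decision,
    ParentBased,
    Sampler,
    SamplingResult,
    TraceIdRatioBased,
)

# Sample 10% of regular traffic. Head sampling decides when a span starts, so it
# cannot see errors that happen later; keeping every error trace requires tail
# sampling (for example, in the Collector).
ratio_sampler = ParentBased(
    root=TraceIdRatioBased(0.1),  # Sample 10% of traces by default
    remote_parent_sampled=TraceIdRatioBased(1.0),  # Keep all traces if parent was sampled
    remote_parent_not_sampled=TraceIdRatioBased(0.1),  # Sample 10% even if parent wasn't sampled
    local_parent_sampled=TraceIdRatioBased(1.0),
    local_parent_not_sampled=TraceIdRatioBased(0.1),
)

# Add sampling based on specific attributes (e.g., sample all traffic with high token counts)
class AttributeSampler(Sampler):
    def __init__(self, delegate, token_threshold=1000):
        self._delegate = delegate
        self._token_threshold = token_threshold

    def should_sample(self, parent_context, trace_id, name, kind=None,
                      attributes=None, links=None, trace_state=None):
        # Only attributes passed when the span is started are visible here
        if attributes and attributes.get("llm.total_tokens", 0) > self._token_threshold:
            parent_state = trace.get_current_span(parent_context).get_span_context().trace_state
            return SamplingResult(Decision.RECORD_AND_SAMPLE, attributes, parent_state)
        # Use default sampling for everything else
        return self._delegate.should_sample(
            parent_context, trace_id, name, kind, attributes, links, trace_state
        )

    def get_description(self):
        return "AttributeSampler"

# Configure tracer with the sampler
# (resource is the Resource object created during tracer setup)
tracer_provider = TracerProvider(
    sampler=AttributeSampler(ratio_sampler),
    resource=resource
)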

This approach ensures you capture important data while keeping telemetry volumes manageable.
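Ratio-based sampling works because the decision is derived from the trace ID itself, so every service that sees the same trace makes the same keep-or-drop choice without coordination. The sketch below illustrates that idea in plain Python. It is an approximation of how ratio samplers generally behave, not a copy of the SDK's implementation, and the function names `sampling_bound` and `should_sample` are hypothetical.

```python
import random

# Only the low 64 bits of the 128-bit trace ID take part in the decision
TRACE_ID_LIMIT = (1 << 64) - 1


def sampling_bound(ratio: float) -> int:
    """Translate a ratio in [0, 1] into an upper bound on the low 64 bits."""
    if not 0.0 <= ratio <= 1.0:
        raise ValueError("ratio must be between 0 and 1")
    return round(ratio * (TRACE_ID_LIMIT + 1))


def should_sample(trace_id: int, ratio: float) -> bool:
    """Deterministic decision: the same trace ID always gets the same answer."""
    return (trace_id & TRACE_ID_LIMIT) < sampling_bound(ratio)


# Simulate 10,000 random trace IDs and keep roughly 10% of them
rng = random.Random(42)
trace_ids = [rng.getrandbits(128) for _ in range(10_000)]
kept = sum(should_sample(tid, 0.1) for tid in trace_ids)
print(f"kept {kept} of {len(trace_ids)} traces")
```

Since the decision depends only on the trace ID, raising the ratio later keeps every trace that was previously kept and adds new ones, which makes sampling rates safe to tune incrementally.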

Privacy Protection for Sensitive Prompts

When monitoring AI systems, privacy is crucial. Implement these privacy protections:

# OpenTelemetry Collector configuration for privacy
processors:
  attributes:
    actions:
      # Hash sensitive information
      - key: llm.prompt
        action: hash
        hash_salt: ${env:HASH_SALT}

      # Truncate long values
      # Note: confirm that your Collector build supports this action; the upstream
      # attributes processor documents insert, update, upsert, delete, hash,
      # extract, and convert.
      - key: llm.completion
        action: truncate
        max_length: 100

      # Delete certain attributes
      - key: user.email
        action: delete

      # Update to generic values (an update action takes a single value source)
      - key: llm.prompt_template
        action: update
        value: 'REDACTED_TEMPLATE'

This processor configuration sanitizes sensitive data before it's exported to observability backends.
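Sanitizing can also happen inside the application, before an attribute is ever set on a span, so that raw prompts never leave the process. The following is a minimal standard-library sketch of that idea; the helper names and the attribute keys `llm.prompt.hash` and `llm.completion.preview` are hypothetical, and the salt would normally come from a secret store rather than a literal.

```python
import hashlib
import hmac


def hash_prompt(prompt: str, salt: bytes) -> str:
    """Keyed SHA-256 digest: stable for identical prompts, not reversible."""
    return hmac.new(salt, prompt.encode("utf-8"), hashlib.sha256).hexdigest()


def truncate(text: str, max_length: int = 100) -> str:
    """Keep at most max_length characters and mark the cut with an ellipsis."""
    if len(text) <= max_length:
        return text
    return text[:max_length] + "..."


def sanitize_attributes(prompt: str, completion: str, salt: bytes) -> dict:
    """Build span attributes that are safe to export."""
    return {
        "llm.prompt.hash": hash_prompt(prompt, salt),
        "llm.completion.preview": truncate(completion),
    }


# Example with a made-up salt; load the real one from configuration or a secret store
safe = sanitize_attributes(
    prompt="Summarize the attached customer contract",
    completion="The contract covers " + "details " * 40,
    salt=b"example-salt",
)
print(safe["llm.prompt.hash"][:16], len(safe["llm.completion.preview"]))
```

Hashing keeps identical prompts correlatable across traces without storing their content, and the truncated preview preserves just enough of the completion for debugging.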

Contextual Logging Integration

Enhance your traces with contextual logs for deeper insights:

import logging

from opentelemetry import trace

tracer = trace.get_tracer(__name__)

# Configure contextual logger
class OpenTelemetryHandler(logging.Handler):
    def emit(self, record):
        current_span = trace.get_current_span()
        if not current_span.is_recording():
            return

        record_dict = {
            'severity': record.levelname,
            'message': record.getMessage(),
            'logger': record.name
        }

        if hasattr(record, 'trace_id'):
            record_dict['trace_id'] = record.trace_id
        else:
            ctx = current_span.get_span_context()
            if ctx.is_valid:
                record_dict['trace_id'] = format(ctx.trace_id, '032x')
                record_dict['span_id'] = format(ctx.span_id, '016x')

        # Add additional context
        if hasattr(record, 'llm_model'):
            record_dict['llm_model'] = record.llm_model

        # Attach the log record to the active span as an event
        current_span.add_event(
            name="log",
            attributes=record_dict
        )

# Use in application
logger = logging.getLogger("llm.service")
logger.setLevel(logging.INFO)  # the default WARNING level would drop info() calls
logger.addHandler(OpenTelemetryHandler())

# When logging in your application
def process_request(prompt, model):
    with tracer.start_as_current_span("process_request") as span:
        logger.info(
            "Processing LLM request",
            extra={"llm_model": model}
        )
        # Process normally...

This logs important events directly to the trace, making debugging easier.

Implementing AI Observability with Uptrace

Uptrace provides a comprehensive platform for monitoring AI systems using OpenTelemetry. Here's how to set it up:

- Deploy Uptrace: Using Docker Compose or Kubernetes

# docker-compose.yml example
version: '3'

services:
  uptrace:
    image: uptrace/uptrace:latest
    volumes:
      - ./uptrace.yml:/etc/uptrace/config.yml
    ports:
      - '14317:14317' # OTLP gRPC
      - '14318:14318' # OTLP HTTP
      - '8080:8080' # UI

  postgres:
    image: postgres:15-alpine
    environment:
      - POSTGRES_PASSWORD=postgres
    volumes:
      - postgres-data:/var/lib/postgresql/data

  clickhouse:
    image: clickhouse/clickhouse-server:23
    volumes:
      - clickhouse-data:/var/lib/clickhouse
    ulimits:
      nofile:
        soft: 262144
        hard: 262144

volumes:
  postgres-data:
  clickhouse-data:

- Configure OpenTelemetry Collector to send data to Uptrace:

exporters:
  otlp:
    endpoint: uptrace:14317
    tls:
      insecure: true

- Set up AI-specific dashboards in Uptrace:

Uptrace allows you to create custom dashboards for AI metrics. Here are some useful visualizations:

- Token usage by model

- Response latency distribution

- Error rates by model and request type

- GPU/CPU utilization during inference

- Agent task success rates

- RAG retrieval accuracy metrics

- Configure AI-specific alerts:

Uptrace's alerting system can be configured to catch common AI system issues:

- Abnormal token usage patterns

- Unusual latency spikes

- High error rates

- Resource exhaustion

- Low model confidence scores

Best Practices for AI System Observability

To get the most from your OpenTelemetry implementation for AI systems, follow these best practices:

- Instrument at the right level of abstraction
  - Instrument both high-level operations (e.g., "generate response") and low-level details (e.g., token counts, embedding lookups)
  - Create a hierarchy of spans that shows the relationship between these operations

- Capture meaningful context
  - Include truncated versions of prompts and completions
  - Record model parameters that affect performance and behavior
  - Store metadata about input sources and output destinations

- Balance detail with volume
  - Use sampling to reduce telemetry from high-volume systems
  - Retain full detail for errors and anomalies
  - Consider different sampling rates for different types of operations

- Correlate across the entire system
  - Ensure context propagation from user interfaces through to AI backends
  - Maintain trace context through asynchronous operations
  - Connect AI operation telemetry with infrastructure metrics

- Monitor for AI-specific issues
  - Prompt injection attempts
  - Hallucinations and output quality issues
  - Token optimization opportunities
  - Resource usage patterns specific to AI workloads

Key Takeaways

✓ OpenTelemetry provides a standardized way to monitor AI systems, including LLMs and agents.

✓ AI-specific semantic conventions ensure consistent telemetry across different platforms.

✓ Manual instrumentation offers the most flexibility, while framework-specific instrumentors simplify implementation.

✓ Privacy protection is essential when monitoring systems that process potentially sensitive prompts.

✓ Uptrace offers specialized AI system monitoring capabilities built on OpenTelemetry standards.

FAQ

- How does OpenTelemetry handle the non-deterministic nature of AI systems?

OpenTelemetry captures the context of each execution, including inputs, parameters, and outputs, allowing you to analyze patterns even in non-deterministic systems. By collecting detailed telemetry across many executions, you can identify trends and anomalies in behavior.

- Can OpenTelemetry help monitor private LLM deployments?

Yes, OpenTelemetry works with any LLM deployment, including self-hosted models like Llama, Mistral, or private GPT instances. The instrumentation approach is the same, though you may want to collect additional metrics related to infrastructure performance.

- How much overhead does OpenTelemetry add to AI systems?

When properly implemented, OpenTelemetry adds minimal overhead, typically less than 5% in terms of latency and resource usage. For high-throughput systems, sampling can further reduce this impact while still providing useful insights.

- How should I monitor AI agent tools and third-party API calls?

Use nested spans to track each tool call within the context of the larger agent execution, and include attributes that capture the tool's purpose, inputs, and outputs. For third-party APIs, propagate context when possible, or create spans that represent these external calls.

- Can OpenTelemetry help identify problematic prompts or agent behaviors?

Yes, by correlating error rates, latency, and other metrics with specific prompts or agent behaviors, you can identify patterns that lead to problems. This data can be used to improve prompts, fine-tune models, or adjust agent logic for better performance.
