Querying Metrics

To learn about metrics, see OpenTelemetry Metrics documentation.

Uptrace provides a powerful query language that supports joining, grouping, and aggregating multiple metrics in a single query.

Uptrace aims to be compatible with the Prometheus query language while extending it in a meaningful way. If you're already familiar with PromQL, read PromQL compatibility guide.

Writing queries

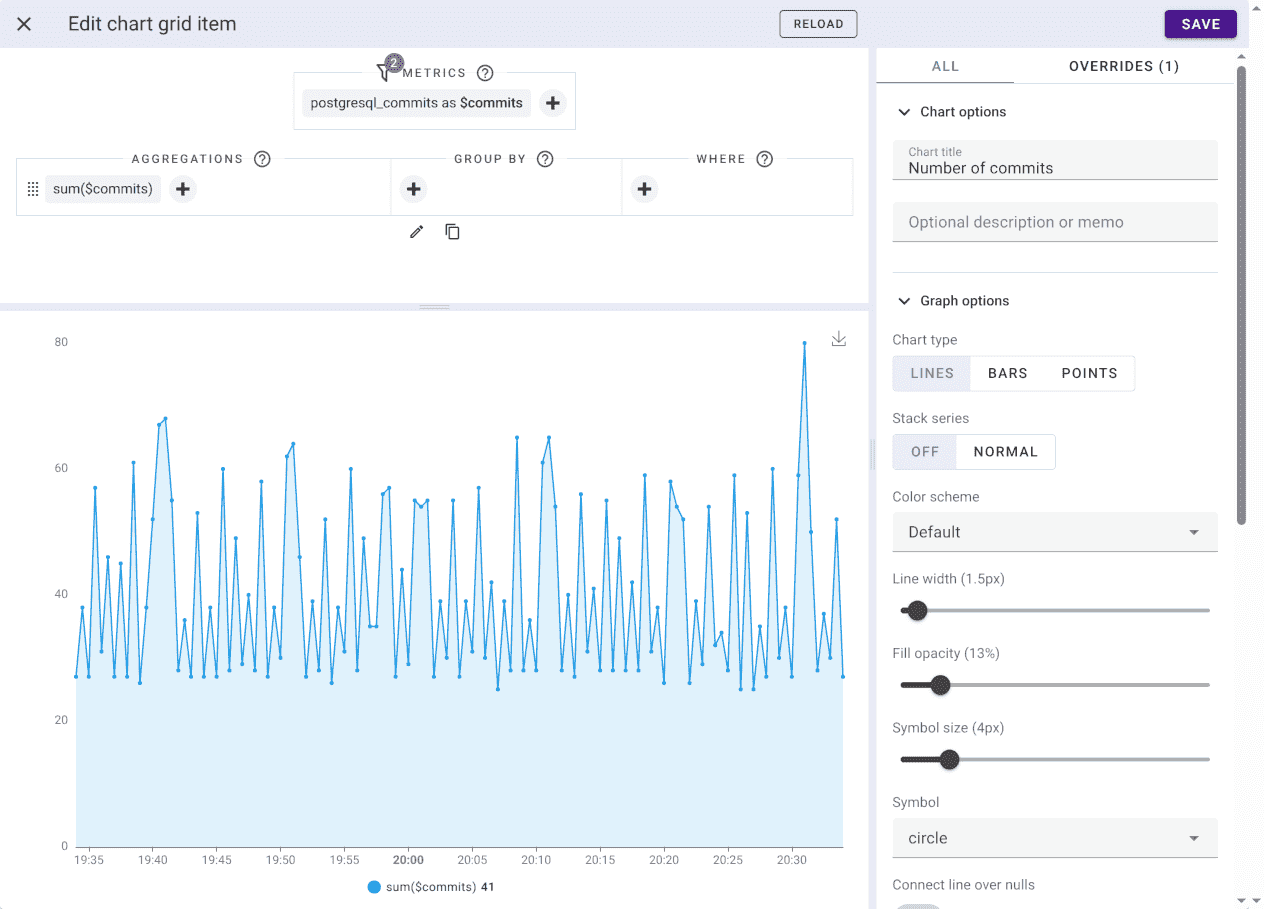

Uptrace allows you to create dashboards using UI or YAML configuration files. This documentation uses the more compact YAML format, but you can achieve the same with the UI.

YAML:

metrics:

- postgresql_commits as $commits

query:

- sum($commits)

UI:

You can find the existing dashboard templates on GitHub.

Aliases

Because metric names can be quite long, Uptrace requires you to provide a short metric alias that must start with the dollar sign:

metrics:

# metric aliases always start with the dollar sign

- system_filesystem_usage as $fs_usage

- system_network_packets as $packets

You must then use the alias instead of the metric name when writing queries:

query:

- sum($fs_usage)

Uptrace also allows to specify an alias for expressions:

query:

- $fs_usage{state="used"} as used_space

- $fs_usage{host_name='host1', device='/dev/sdd1'} as host1_sdd1

You can then reference the expression using the alias:

metrics:

- service_cache_redis as $redis

query:

- $redis{type="hits"} as hits

- $redis{type="misses"} as misses

- hits / (hits + misses) as hit_rate

Grouping

Uptrace allows to customize grouping on the metric and function level:

$metric by (attr1, attr2)

sum($metric by (attr1, attr2))

avg(sum($metric by (attr1, attr2)) by (attr1))

You can also specify grouping for the whole expression:

$metric1 by (type) / $metric2 group by host_name

# The same.

$metric1 by (type, host_name) / $metric2 by (host_name)

And for the whole query affecting all expressions:

$metric1 | metric2 | group by host_name

# The same using expression-wide grouping.

$metric1 group by host_name | $metric2 group by host_name

# Or custom grouping.

$metric1 by (host_name) | $metric2 by (host_name)

Manipulating attributes

You can rename attributes like this:

$metric1 by (deployment_environment as env, service_name as service)

$metric1 | group by deployment_environment as env, service_name as service

To manipulate attribute values, you can use replace and replaceRegexp functions:

group by replace(host_name, 'uptrace-prod-', '') as host

group by replaceRegexp(host, `^`, 'prefix ') as host

group by replaceRegexp(host, `$`, ' suffix') as host

To change strings case, use upper and lower functions:

group by lower(host_name) as host

group by upper(host_name) as host

You can also use a regexp to extract a substring from the attribute value:

group by extract(host_name, `^uptrace-prod-(\w+)$`) as host

Filtering

Uptrace supports all the same filters just like PromQL:

node_cpu_seconds_total{cpu="0",mode="idle"}

node_cpu_seconds_total{cpu!="0",mode~"user|system"}

In addition, you can also add global filters that affect all expressions:

$metric1 | $metric2 | where host = "myhost" | where service = "myservice"

# The same using inline filters.

$metric1{host="myhost",service="myservice"} | $metric2{host="myhost",service="myservice"}

Global filters support the following operators:

=,!=,<,<=,>,>=, for example,where host_name = "myhost".~,!~, for example,where host_name ~ "^prod-[a-z]+-[0-9]+$".like,not like, for example,where host_name like "prod-%".in,not in, for example,where host_name in ("host1", "host2").

Joining

Uptrace supports math between series, for example, to add all equally-labelled series from both sides:

$mem_free + $mem_cached group by host_name, service_name

# The same.

$mem_free by (host_name, service_name) + $mem_cached by (host_name, service_name)

Uptrace also automatically supports one-to-many/many-to-one joins:

# One-to-many

$metric by (type) / $metric by (service_name, type)

# Many-to-one

$metric by (service_name, type) / $metric by (type)

If attribute names don't match, you can rename them like this:

$metric by (hostname as host) + $metric by (host_name as host)

Supported functions

Uptrace supports the following types of functions:

If Uptrace does not support the function you need, please open an issue on GitHub.

Aggregate

Aggregate functions combine multiple timeseries using the specified function and grouping attributes. When possible, aggregation is pushed down to ClickHouse for maximum efficiency.

minmaxsumavgmedian,p50,p75,p90,p99,count. Only histograms.

The count function returns the number of observed values in a histogram. To count the number of timeseries, use uniq($metric, attr1, attr2), which efficiently counts the number of timeseries in the database without selecting all timeseries.

Rollup

Rollup (or range/window) functions calculate rollups over data points in the specified lookbehind window. The number of timeseries and the number of datapoints remain the same.

min_over_time,max_over_time,sum_over_time,avg_over_time,median_over_timerateandirateincreaseanddelta

You can specify the lookbehind window in square brackets, e.g. rate($metric[5i]) where i is equal to the current interval. When omitted, the default lookbehind window is 10i.

Transform

Transform functions operate on each point of each timeseries. The number of timeseries and the number of datapoints remain the same.

absceil,floor,trunccos,cosh,acos,acoshsin,sinh,asin,asinhtan,tanh,atan,atanhexp,exp2ln,log,log2,log10perSecdivides each point by the number of seconds in the grouping interval. You can achieve the same with$metric / _seconds.perMindivides each point by the number of minutes in the grouping interval. You can achieve the same with$metric / _minutes.clamp_min(ts timeseries, min scalar)clampstsvalues to have a lower limit ofmin.clamp_max(ts timeseries, max scalar)clampstsvalues to have an upper limit ofmax.

Attribute manipulation

Additionally, these functions manipulate attributes and can only be used in grouping expressions:

lower(attr)lowers the case of theattrvalue.upper(attr)uppers the case of theattrvalue.trimPrefix(attr, "prefix")removes the provided leadingprefixstring.trimSuffix(attr, "suffix")removes the provided trailingsuffixstring.extract(haystack, pattern)extracts a fragment of thehaystackstring using the regular expressionpattern.replace(haystack, substring, replacement)replaces all occurrences of thesubstringinhaystackby thereplacementstring.replaceRegexp(haystack, pattern, replacement)replaces all occurrences of the substring matching the regular expressionpatterninhaystackby thereplacementstring.

If

The special if function enables conditional branching. If the condition cond evaluates to a value other than zero, the function returns the result of the then expression. If cond evaluates to 0 or NaN, then the result of the else expression is returned.

if(cond, then)

if(cond, then, else)

For example, you can use if to calculate the hit rate only if the number of hits and misses exceeds a certain threshold:

if(

sum($hits) + sum($misses) >= 100,

sum($misses) / (sum($hits) + sum($misses))

) as hit_rate

When you omit the else expression, Uptrace uses the null value. If all timeseries values are null, Uptrace removes such timeseries from the result. If you want to keep all timeseries, you can specify the else expression:

if(cond, else, 1)

if(cond, else, NaN)

Offset

The offset modifier allows to set time offset for the query.

For example, this query retrieves the value of http_requests_total from 5 minutes ago, relative to the query evaluation time:

$http_requests_total offset 5m

A negative offset allows to look ahead of the query evaluation time:

$http_requests_total offset -5m

Instruments

OpenTelemetry offers various instruments, each with its own set of aggregate functions:

| Instrument Name | Timeseries kind |

|---|---|

| Counter, CounterObserver | Counter |

| UpDownCounter, UpDownCounterObserver | Additive |

| GaugeObserver | Gauge |

| Histogram | Histogram |

| AWS CloudWatch | Summary |

Counter

Counter is a timeseries kind that measures additive non-decreasing values, for example, the total number of:

- processed requests

- received bytes

- disk reads

Uptrace supports the following functions to aggregate counter timeseries:

| Expression | Result timeseries |

|---|---|

sum($metric) | Sum of timeseries |

perMin(sum($metric)) | sum($metric) / _minutes |

perSec(sum($metric)) | sum($metric) / _seconds |

Gauge

Gauge is a timeseries kind that measures non-additive values for which sum does not produce a meaningful correct result, for example:

- error rate

- memory utilization

- cache hit rate

Uptrace supports the following functions to aggregate gauge timeseries:

| Expression | Result timeseries |

|---|---|

avg($metric) | Avg of timeseries |

min($metric) | Min of timeseries |

max($metric) | Max of timeseries |

sum($metric) | Sum of timeseries |

perMin(sum($metric)) | sum($metric) / _minutes |

perSec(sum($metric)) | sum($metric) / _seconds |

delta($metric) | Diff between curr and previous values |

* Note that the sum, perMin, perSec, and delta functions should not be normally used with this instrument and were added only for compatibility with Prometheus and AWS metrics. For the same reason, per_min(sum($metric)) and delta(sum($metric)) are also supported.

Additive

Additive is a timeseries kind which measures additive values that increase or decrease with time, for example, the number of:

- active requests

- open connections

- memory in use (megabytes)

Uptrace supports the following functions to aggregate additive timeseries:

| Expression | Result timeseries |

|---|---|

sum($metric) | Sum of timeseries |

avg($metric) | Avg of timeseries |

min($metric) | Min of timeseries |

max($metric) | Max of timeseries |

perMin(sum($metric)) | sum($metric) / _minutes |

perSec(sum($metric)) | sum($metric) / _seconds |

delta($metric) | Diff between curr and previous values |

* Note that the delta function should not be normally used with this instrument and was added only for compatibility with Prometheus and AWS metrics.

Histogram

Histogram is a timeseries kind that contains a histogram from recorded values, for example:

- request latency

- request size

Uptrace supports the following functions to aggregate histogram timeseries:

| Expression | Result timeseries |

|---|---|

count($metric) | Number of observed values in timeseries |

p50($metric) | P50 of timeseries |

p75($metric) | P75 of timeseries |

p90($metric) | P90 of timeseries |

p95($metric) | P95 of timeseries |

p99($metric) | P99 of timeseries |

avg($metric) | sum($metric) / count($metric) |

min($metric) | Min observed value in the histogram |

max($metric) | Max observed value in the histogram |

Note that you can also use perMin(count($metric)) and perSec(count($metric)).

Summary

Sum is a timeseries kind that exists for compatibility with Prometheus and AWS Cloud Watch. It stores the min, max, sum, and count aggregates of observed values.

| Expression | Result timeseries |

|---|---|

sum($metric) | Sum of timeseries |

count($metric) | Number of observed values in timeseries |

avg($metric) | sum($metric) / count($metric) |

min($metric) | Min observed value |

max($metric) | Max observed value |

Note that you can also use perMin(count($metric)) and perSec(count($metric)).

Misc

What are timeseries?

A timeseries is a metric with an unique set of attributes, for example, each host has a separate timeseries for the same metric name:

# metric_name{ attr1, attr2... }

system_filesystem_usage{host_name='host1'} # timeseries 1

system_filesystem_usage{host_name='host2'} # timeseries 2

You can add more attributes to create more detailed and rich timeseries, for example, you can use state attribute to report the number of free and used bytes in a filesystem:

system_filesystem_usage{host_name='host1', state='free'} # timeseries 1

system_filesystem_usage{host_name='host1', state='used'} # timeseries 2

system_filesystem_usage{host_name='host2', state='free'} # timeseries 3

system_filesystem_usage{host_name='host2', state='used'} # timeseries 4

With just 2 attributes, you can write a number of useful queries:

# the filesystem size (free+used bytes) on each host

query:

- sum($fs_usage) group by host_name

# the number of free bytes on each host

query:

- sum($fs_usage{state='free'}) as free group by host_name

# fs utilization on each host

query:

- sum($fs_usage{state='used'}) / sum($fs_usage) as fs_util group by host_name

# the size of your dataset on all hosts

query:

- sum($fs_usage{state='used'}) as dataset_size

Binary operator precedence

The following list shows the precedence of binary operators in Uptrace, from highest to lowest.

^*,/,%+,-==,!=,<=,<,>=,>and,unlessor

Operators on the same precedence level are left-associative. For example, 2 * 3 % 2 is equivalent to (2 * 3) % 2.