OpenTelemetry Log4j logs [Java]

The OpenTelemetry Log4j appender bridges Log4j 2 into OpenTelemetry. With this integration, your Log4j logs are correlated with distributed traces whenever a span is active, and they can be exported to any OpenTelemetry-compatible backend.

Two Workflows for Log Instrumentation

OpenTelemetry provides two workflows for consuming Log4j instrumentation:

Direct to Collector

Logs are emitted directly from your application to a collector using OTLP. This approach:

- Is simple to set up with no additional log forwarding components

- Allows emitting structured logs conforming to the OpenTelemetry log data model

- May add overhead for queuing and exporting logs over the network

Use this when: You want a simple setup and your application can handle the export overhead.
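To make the queuing overhead concrete, here is a simplified, stdlib-only model of what a batching processor does. Records go into a bounded in-memory queue on the logging thread and are drained in fixed-size batches for export. The capacity of 2048 and batch size of 512 used in `main` mirror the documented defaults of the SDK's BatchLogRecordProcessor. The `BatchingSketch` class is hypothetical and is not the SDK's code: it is single-threaded, and it assumes that new records are dropped when the queue is full rather than blocking the caller.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;

// Hypothetical, simplified model of a batching log processor (not the SDK implementation).
// Not thread-safe: the real processor drains the queue on a background thread.
public class BatchingSketch {
    private final ArrayDeque<String> queue = new ArrayDeque<>();
    private final int maxQueueSize;
    private final int maxBatchSize;
    private long dropped = 0;

    public BatchingSketch(int maxQueueSize, int maxBatchSize) {
        this.maxQueueSize = maxQueueSize;
        this.maxBatchSize = maxBatchSize;
    }

    // Logging-thread side: a cheap enqueue that drops the record when the queue is full.
    public boolean emit(String record) {
        if (queue.size() >= maxQueueSize) {
            dropped++;
            return false;
        }
        queue.addLast(record);
        return true;
    }

    // Exporter side: take up to one batch of records, oldest first.
    public List<String> nextBatch() {
        List<String> batch = new ArrayList<>();
        while (!queue.isEmpty() && batch.size() < maxBatchSize) {
            batch.add(queue.pollFirst());
        }
        return batch;
    }

    public long droppedCount() {
        return dropped;
    }

    public int queued() {
        return queue.size();
    }

    public static void main(String[] args) {
        BatchingSketch processor = new BatchingSketch(2048, 512);
        for (int i = 0; i < 3000; i++) {
            processor.emit("log " + i);
        }
        // prints "queued=2048 dropped=952"
        System.out.println("queued=" + processor.queued() + " dropped=" + processor.droppedCount());
        // prints "first batch size=512"
        System.out.println("first batch size=" + processor.nextBatch().size());
    }
}
```

The bounded queue is the key design choice. It caps memory use and keeps the logging call cheap, but a sustained burst that outpaces the exporter loses records instead of slowing the application down.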

Via File or Stdout

Logs are written to files or stdout, then collected by another component (e.g., Fluent Bit, OpenTelemetry Collector filelog receiver). This approach:

- Has minimal application overhead

- Requires parsing logs to extract structured data

- Needs trace context injected into log output for correlation

Use this when: You have existing log collection infrastructure or need minimal application overhead.

What is OpenTelemetry?

OpenTelemetry is an open-source observability framework that aims to standardize and simplify the collection, processing, and export of telemetry data from applications and systems.

OpenTelemetry supports multiple programming languages and platforms, making it suitable for a wide range of applications and environments.

OpenTelemetry enables developers to instrument their code and collect telemetry data, which can then be exported to various OpenTelemetry backends or observability platforms for analysis and visualization.

Log4j Appender (Direct to Collector)

The OpenTelemetry Log4j appender captures log records and exports them via the OpenTelemetry Logs SDK. This is the recommended approach for sending logs directly to an observability backend.

Installation

Add the dependency to your project:

```xml
<dependency>
  <groupId>io.opentelemetry.instrumentation</groupId>
  <artifactId>opentelemetry-log4j-appender-2.17</artifactId>
  <version>2.11.0-alpha</version>
</dependency>
```

You also need the OpenTelemetry SDK and OTLP exporter:

```xml
<dependency>
  <groupId>io.opentelemetry</groupId>
  <artifactId>opentelemetry-sdk</artifactId>
  <version>1.45.0</version>
</dependency>

<dependency>
  <groupId>io.opentelemetry</groupId>
  <artifactId>opentelemetry-exporter-otlp</artifactId>
  <version>1.45.0</version>
</dependency>
```

Configuration

Configure the appender in your log4j2.xml. The packages attribute on the Configuration element tells Log4j where to find the OpenTelemetry appender plugin:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<Configuration status="WARN" packages="io.opentelemetry.instrumentation.log4j.appender.v2_17">
  <Appenders>
    <!-- Console appender for local debugging -->
    <Console name="Console" target="SYSTEM_OUT">
      <PatternLayout pattern="%d{HH:mm:ss.SSS} [%t] %-5level %logger{36} - %msg%n"/>
    </Console>

    <!-- OpenTelemetry appender -->
    <OpenTelemetry name="OpenTelemetryAppender"
                   captureExperimentalAttributes="true"
                   captureMapMessageAttributes="true"
                   captureMarkerAttribute="true"
                   captureContextDataAttributes="*"/>
  </Appenders>

  <Loggers>
    <Root level="INFO">
      <AppenderRef ref="Console"/>
      <AppenderRef ref="OpenTelemetryAppender"/>
    </Root>
  </Loggers>
</Configuration>
```

SDK Initialization

Initialize the OpenTelemetry SDK and install the appender in your application startup:

```java
import io.opentelemetry.exporter.otlp.logs.OtlpGrpcLogRecordExporter;
import io.opentelemetry.instrumentation.log4j.appender.v2_17.OpenTelemetryAppender;
import io.opentelemetry.sdk.OpenTelemetrySdk;
import io.opentelemetry.sdk.logs.SdkLoggerProvider;
import io.opentelemetry.sdk.logs.export.BatchLogRecordProcessor;
import io.opentelemetry.sdk.resources.Resource;

public class Application {

    public static void main(String[] args) {
        // Configure the OTLP/gRPC exporter (UPTRACE_DSN must be set in the environment)
        OtlpGrpcLogRecordExporter logExporter = OtlpGrpcLogRecordExporter.builder()
            .setEndpoint("https://api.uptrace.dev:4317")
            .addHeader("uptrace-dsn", System.getenv("UPTRACE_DSN"))
            .build();

        // Describe the service that emits the logs
        Resource resource = Resource.getDefault().toBuilder()
            .put("service.name", "my-java-service")
            .put("service.version", "1.0.0")
            .build();

        // Build the logger provider with a batch processor
        SdkLoggerProvider loggerProvider = SdkLoggerProvider.builder()
            .setResource(resource)
            .addLogRecordProcessor(BatchLogRecordProcessor.builder(logExporter).build())
            .build();

        // Build the OpenTelemetry SDK
        OpenTelemetrySdk openTelemetrySdk = OpenTelemetrySdk.builder()
            .setLoggerProvider(loggerProvider)
            .build();

        // Install the appender: connects Log4j to OpenTelemetry
        OpenTelemetryAppender.install(openTelemetrySdk);

        try {
            runApplication();
        } finally {
            // Flush buffered log records and shut down gracefully
            openTelemetrySdk.close();
        }
    }

    private static void runApplication() {
        // Your application code
    }
}
```

Appender Options

The OpenTelemetry appender supports these configuration attributes:

| Attribute | Type | Default | Description |
|---|---|---|---|
| captureExperimentalAttributes | boolean | false | Capture thread name and ID as attributes |
| captureCodeAttributes | boolean | false | Capture source code location (class, method, line) |
| captureMapMessageAttributes | boolean | false | Capture MapMessage entries as attributes |
| captureMarkerAttribute | boolean | false | Capture Log4j markers as the log4j.marker attribute |
| captureContextDataAttributes | string | - | Comma-separated list of MDC keys to capture, or * for all |
| captureEventName | boolean | false | Use event.name attribute as the log event name |
| numLogsCapturedBeforeOtelInstall | int | 1000 | Number of logs to cache before SDK initialization |
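The numLogsCapturedBeforeOtelInstall option exists because Log4j can start logging before OpenTelemetryAppender.install() is called. Below is a stdlib-only sketch of the idea: a bounded cache holds early events, then replays them in order once a sink is installed. The EarlyLogCache class and its overflow policy, which discards events beyond capacity, are assumptions made for illustration. They are not the appender's actual implementation.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical model of caching logs until the SDK is installed (not the appender's code).
public class EarlyLogCache {
    private final int capacity;
    private final List<String> cached = new ArrayList<>();
    private Consumer<String> sink; // null until install() is called

    public EarlyLogCache(int capacity) {
        this.capacity = capacity;
    }

    public synchronized void log(String event) {
        if (sink != null) {
            sink.accept(event);            // SDK installed: forward immediately
        } else if (cached.size() < capacity) {
            cached.add(event);             // before install: cache up to capacity
        }                                  // assumption: events beyond capacity are discarded
    }

    public synchronized void install(Consumer<String> newSink) {
        this.sink = newSink;
        for (String event : cached) {      // replay cached events in their original order
            newSink.accept(event);
        }
        cached.clear();
    }

    public static void main(String[] args) {
        EarlyLogCache cache = new EarlyLogCache(1000);
        cache.log("starting up");
        cache.log("loading config");
        List<String> exported = new ArrayList<>();
        cache.install(exported::add);
        cache.log("ready");
        System.out.println(exported); // prints [starting up, loading config, ready]
    }
}
```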

Context Injection (Via File/Stdout)

For applications that already output logs to files or stdout (e.g., for collection by Fluent Bit or the OpenTelemetry Collector filelog receiver), you can inject trace context into Log4j's MDC. This enables log-trace correlation without changing your log output destination.

Installation

Add the context data autoconfigure dependency:

```xml
<dependency>
  <groupId>io.opentelemetry.instrumentation</groupId>
  <artifactId>opentelemetry-log4j-context-data-2.17-autoconfigure</artifactId>
  <version>2.11.0-alpha</version>
  <scope>runtime</scope>
</dependency>
```

Configuration

The module configures itself through Log4j's ContextDataProvider SPI, so adding the dependency is enough. Then update your log pattern to reference the trace context with the %X{key} syntax:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<Configuration status="WARN">
  <Appenders>
    <Console name="Console" target="SYSTEM_OUT">
      <PatternLayout pattern="%d{HH:mm:ss.SSS} [%t] %-5level %logger{36} trace_id=%X{trace_id} span_id=%X{span_id} trace_flags=%X{trace_flags} - %msg%n"/>
    </Console>
  </Appenders>

  <Loggers>
    <Root level="INFO">
      <AppenderRef ref="Console"/>
    </Root>
  </Loggers>
</Configuration>
```
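On the collection side, the pattern above produces plain-text lines that the log collector has to parse. The following stdlib-only sketch shows that parsing step. It assumes the exact trace_id=... span_id=... trace_flags=... layout used in the pattern, and the class name and sample IDs are invented for illustration. When no span is active, %X{...} renders as an empty string, so the sketch treats empty values as "no trace context".

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical collector-side parser for lines produced by the PatternLayout above.
public class TraceContextLineParser {

    // Captures the three %X{...} values; each may be empty when no span was active.
    private static final Pattern FIELDS = Pattern.compile(
        "trace_id=(\\S*) span_id=(\\S*) trace_flags=(\\S*) - ");

    public static Map<String, String> parse(String line) {
        Map<String, String> fields = new LinkedHashMap<>();
        Matcher m = FIELDS.matcher(line);
        if (m.find()) {
            putIfPresent(fields, "trace_id", m.group(1));
            putIfPresent(fields, "span_id", m.group(2));
            putIfPresent(fields, "trace_flags", m.group(3));
        }
        return fields;
    }

    // Empty values mean "no trace context", so they are left out of the result.
    private static void putIfPresent(Map<String, String> fields, String key, String value) {
        if (!value.isEmpty()) {
            fields.put(key, value);
        }
    }

    public static void main(String[] args) {
        String line = "12:00:00.000 [main] INFO  com.example.OrderService "
            + "trace_id=4bf92f3577b34da6a3ce929d0e0e4736 span_id=00f067aa0ba902b7 "
            + "trace_flags=01 - Processing order: 42";
        // prints {trace_id=4bf92f3577b34da6a3ce929d0e0e4736, span_id=00f067aa0ba902b7, trace_flags=01}
        System.out.println(parse(line));
    }
}
```

In practice a collector such as the filelog receiver does this with its own regex or JSON parsers. The point is that plain-text output makes the layout part of your contract with the collector.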

Injected Context Fields

When a span is active, these keys are automatically added to Log4j's context (MDC):

| Key | Description |
|---|---|
| trace_id | The current trace ID |
| span_id | The current span ID |
| trace_flags | Trace flags (e.g., sampling decision) |
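These values use the W3C Trace Context encoding that OpenTelemetry relies on. trace_id is 32 lowercase hex characters and span_id is 16, and neither may be all zeros. trace_flags is two hex digits whose lowest bit is the "sampled" flag. The stdlib sketch below checks those shapes and decodes the sampled bit, for example to sanity-check fields parsed from log output. The helper names are hypothetical.

```java
import java.util.regex.Pattern;

// Hypothetical helpers for validating W3C Trace Context values found in log context data.
public class TraceContextFields {
    private static final Pattern TRACE_ID = Pattern.compile("[0-9a-f]{32}");
    private static final Pattern SPAN_ID = Pattern.compile("[0-9a-f]{16}");
    private static final Pattern FLAGS = Pattern.compile("[0-9a-f]{2}");

    // All-zero IDs are invalid in W3C Trace Context.
    public static boolean isValidTraceId(String id) {
        return id != null && TRACE_ID.matcher(id).matches() && !id.matches("0+");
    }

    public static boolean isValidSpanId(String id) {
        return id != null && SPAN_ID.matcher(id).matches() && !id.matches("0+");
    }

    // The lowest bit (0x01) of trace_flags is the "sampled" flag.
    public static boolean isSampled(String flags) {
        if (flags == null || !FLAGS.matcher(flags).matches()) {
            throw new IllegalArgumentException("invalid trace_flags: " + flags);
        }
        return (Integer.parseInt(flags, 16) & 0x01) != 0;
    }

    public static void main(String[] args) {
        System.out.println(isValidTraceId("4bf92f3577b34da6a3ce929d0e0e4736")); // true
        System.out.println(isValidSpanId("0000000000000000"));                 // false
        System.out.println(isSampled("01"));                                   // true
    }
}
```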

Customizing Field Names

Customize the injected field names via system properties or environment variables:

| System Property | Environment Variable | Default |
|---|---|---|
| otel.instrumentation.common.logging.trace-id | OTEL_INSTRUMENTATION_COMMON_LOGGING_TRACE_ID | trace_id |
| otel.instrumentation.common.logging.span-id | OTEL_INSTRUMENTATION_COMMON_LOGGING_SPAN_ID | span_id |
| otel.instrumentation.common.logging.trace-flags | OTEL_INSTRUMENTATION_COMMON_LOGGING_TRACE_FLAGS | trace_flags |
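Each environment variable name in the table is the system property name upper-cased, with dots and dashes replaced by underscores. The stdlib sketch below reproduces that mapping. It then resolves a setting with the system property taking precedence over the environment variable, followed by the default, which matches how OpenTelemetry Java configuration is documented to behave. ConfigLookup is a hypothetical illustration; the real lookup happens inside the instrumentation.

```java
import java.util.Locale;
import java.util.Map;
import java.util.Properties;

// Hypothetical illustration of property-name to env-var mapping and lookup precedence.
public class ConfigLookup {

    // otel.instrumentation.common.logging.trace-id -> OTEL_INSTRUMENTATION_COMMON_LOGGING_TRACE_ID
    public static String toEnvVarName(String propertyName) {
        return propertyName.toUpperCase(Locale.ROOT).replace('.', '_').replace('-', '_');
    }

    // System property wins, then the environment variable, then the default.
    public static String resolve(String propertyName, String defaultValue,
                                 Properties sysProps, Map<String, String> env) {
        String fromProps = sysProps.getProperty(propertyName);
        if (fromProps != null) {
            return fromProps;
        }
        String fromEnv = env.get(toEnvVarName(propertyName));
        if (fromEnv != null) {
            return fromEnv;
        }
        return defaultValue;
    }

    public static void main(String[] args) {
        String key = "otel.instrumentation.common.logging.trace-id";
        // prints OTEL_INSTRUMENTATION_COMMON_LOGGING_TRACE_ID
        System.out.println(toEnvVarName(key));
        // prints the effective MDC key for the trace ID in this JVM
        System.out.println(resolve(key, "trace_id", System.getProperties(), System.getenv()));
    }
}
```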

Baggage Support

Enable baggage propagation to MDC by setting:

```shell
export OTEL_INSTRUMENTATION_LOG4J_CONTEXT_DATA_ADD_BAGGAGE=true
```

Baggage entries will appear as baggage.<key> in the context data.
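To illustrate the naming convention, the stdlib sketch below converts a set of baggage entries into the context-data keys that a layout would reference, such as %X{baggage.user.id}. BaggageToContextData is hypothetical. With the real instrumentation you only set the flag above and reference the prefixed keys in your pattern.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical illustration of how baggage entries are named in Log4j context data.
public class BaggageToContextData {

    // Each baggage entry "<key>" becomes context data "baggage.<key>".
    public static Map<String, String> toContextData(Map<String, String> baggage) {
        Map<String, String> contextData = new TreeMap<>();
        baggage.forEach((key, value) -> contextData.put("baggage." + key, value));
        return contextData;
    }

    public static void main(String[] args) {
        Map<String, String> baggage = Map.of("user.id", "42", "tenant", "acme");
        // prints {baggage.tenant=acme, baggage.user.id=42}
        System.out.println(toContextData(baggage));
    }
}
```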

Using with Java Agent (Zero-Code)

If you're using the OpenTelemetry Java Agent, Log4j instrumentation is included automatically. No additional dependencies or code changes are needed.

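```shell
# Java agent 2.x defaults to http/protobuf, so select gRPC explicitly for port 4317
java -javaagent:opentelemetry-javaagent.jar \
  -Dotel.service.name=my-service \
  -Dotel.exporter.otlp.protocol=grpc \
  -Dotel.exporter.otlp.endpoint=https://api.uptrace.dev:4317 \
  -Dotel.exporter.otlp.headers=uptrace-dsn=<your-dsn> \
  -Dotel.logs.exporter=otlp \
  -jar your-app.jar
```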

The agent automatically:

- Injects trace context into the Log4j MDC (log4j-context-data)

- Captures log records and exports them via OTLP (log4j-appender)

Controlling Log4j Instrumentation

You can selectively enable or disable Log4j instrumentation:

| System Property | Description |
|---|---|
| otel.instrumentation.log4j-appender.enabled | Enable/disable log export |
| otel.instrumentation.log4j-context-data.enabled | Enable/disable MDC injection |

For example, to only inject trace context without exporting logs:

```shell
java -javaagent:opentelemetry-javaagent.jar \
  -Dotel.instrumentation.log4j-appender.enabled=false \
  -Dotel.instrumentation.log4j-context-data.enabled=true \
  -jar your-app.jar
```

Spring Boot Starter

When using the OpenTelemetry Spring Boot Starter, add the OpenTelemetry appender to your log4j2.xml:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<Configuration status="WARN" packages="io.opentelemetry.instrumentation.log4j.appender.v2_17">
  <Appenders>
    <OpenTelemetry name="OpenTelemetryAppender"/>
  </Appenders>

  <Loggers>
    <Root>
      <AppenderRef ref="OpenTelemetryAppender" level="All"/>
    </Root>
  </Loggers>
</Configuration>
```

Control the appender via application properties:

```properties
# Enable/disable Log4j OpenTelemetry appender
otel.instrumentation.log4j-appender.enabled=true
```

Complete Example

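Here's a complete example combining tracing and logging. The tracer comes from GlobalOpenTelemetry, so this assumes a tracer provider has been registered globally, for example by the Java agent or by building the SDK with buildAndRegisterGlobal(). Otherwise the spans are no-ops and no trace context is attached to the logs: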

```java
import io.opentelemetry.api.GlobalOpenTelemetry;
import io.opentelemetry.api.trace.Span;
import io.opentelemetry.api.trace.StatusCode;
import io.opentelemetry.api.trace.Tracer;
import io.opentelemetry.context.Scope;
import org.apache.logging.log4j.LogManager;
import org.apache.logging.log4j.Logger;

public class OrderService {

    private static final Logger logger = LogManager.getLogger(OrderService.class);

    private final Tracer tracer;

    public OrderService() {
        this.tracer = GlobalOpenTelemetry.getTracer("OrderService", "1.0.0");
    }

    public void processOrder(String orderId) {
        Span span = tracer.spanBuilder("processOrder")
            .setAttribute("order.id", orderId)
            .startSpan();

        try (Scope scope = span.makeCurrent()) {
            // Logs emitted while the span is current carry its trace and span IDs
            logger.info("Processing order: {}", orderId);

            validateOrder(orderId);
            logger.debug("Order validated successfully");

            chargePayment(orderId);
            logger.info("Payment processed for order: {}", orderId);

            fulfillOrder(orderId);
            logger.info("Order fulfilled: {}", orderId);
        } catch (RuntimeException e) {
            // The trailing Throwable argument is logged as the record's exception
            logger.error("Failed to process order: {}", orderId, e);
            span.recordException(e);
            span.setStatus(StatusCode.ERROR);
            throw e;
        } finally {
            span.end();
        }
    }

    private void validateOrder(String orderId) {
        // Child span: parented to processOrder because that span is current
        Span span = tracer.spanBuilder("validateOrder").startSpan();
        try (Scope scope = span.makeCurrent()) {
            logger.debug("Validating order {}", orderId);
            // Validation logic
        } finally {
            span.end();
        }
    }

    private void chargePayment(String orderId) {
        Span span = tracer.spanBuilder("chargePayment").startSpan();
        try (Scope scope = span.makeCurrent()) {
            logger.info("Charging payment for order {}", orderId);
            // Payment logic
        } finally {
            span.end();
        }
    }

    private void fulfillOrder(String orderId) {
        Span span = tracer.spanBuilder("fulfillOrder").startSpan();
        try (Scope scope = span.makeCurrent()) {
            logger.info("Fulfilling order {}", orderId);
            // Fulfillment logic
        } finally {
            span.end();
        }
    }
}
```

JSON Logging for Kubernetes

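For production environments, especially Kubernetes deployments, use JSON logging for structured output that log collectors can parse easily. The trace fields are read from the thread context (MDC), so they require the context-data module or the Java agent described above: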

```xml
<?xml version="1.0" encoding="UTF-8"?>
<Configuration status="WARN">
  <Appenders>
    <Console name="Console" target="SYSTEM_OUT">
      <JsonTemplateLayout eventTemplateUri="classpath:LogstashJsonEventLayoutV1.json">
        <!-- Resolve each field from the thread context (MDC) for every log event -->
        <EventTemplateAdditionalField
            key="trace_id"
            format="JSON"
            value='{"$resolver": "mdc", "key": "trace_id"}'/>
        <EventTemplateAdditionalField
            key="span_id"
            format="JSON"
            value='{"$resolver": "mdc", "key": "span_id"}'/>
        <EventTemplateAdditionalField
            key="trace_flags"
            format="JSON"
            value='{"$resolver": "mdc", "key": "trace_flags"}'/>
      </JsonTemplateLayout>
    </Console>
  </Appenders>

  <Loggers>
    <Root level="INFO">
      <AppenderRef ref="Console"/>
    </Root>
  </Loggers>
</Configuration>
```

Add the JSON template layout dependency:

```xml
<dependency>
  <groupId>org.apache.logging.log4j</groupId>
  <artifactId>log4j-layout-template-json</artifactId>
  <version>2.24.0</version>
</dependency>
```

See the Kubernetes stdout logging example for a complete end-to-end demonstration.

What is Uptrace?

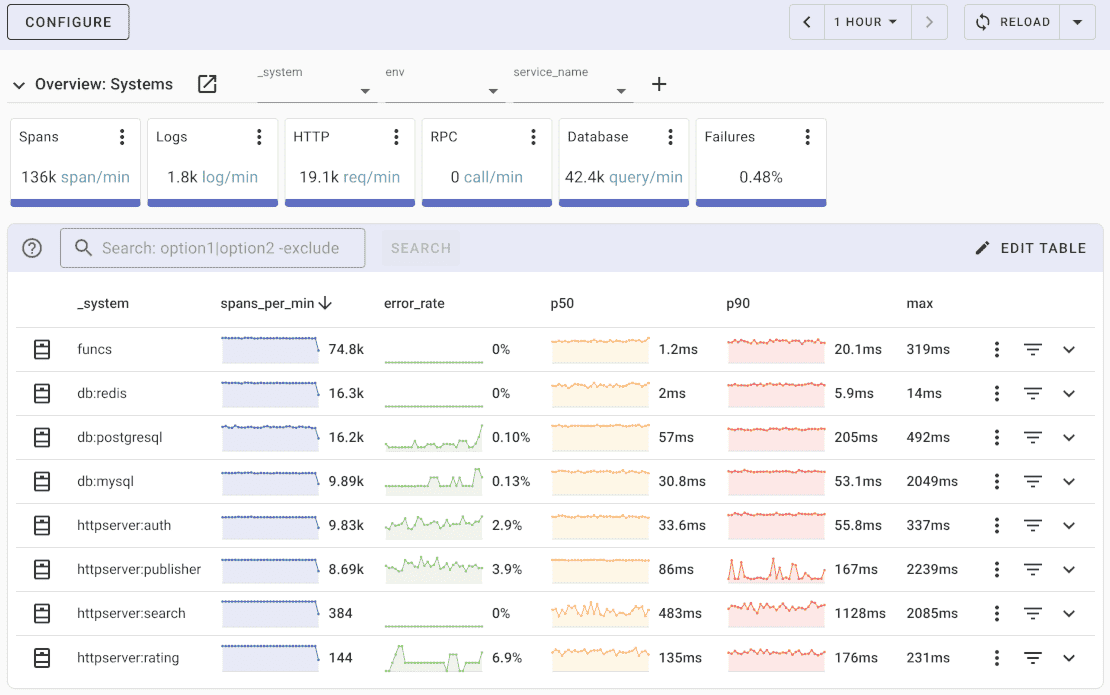

Uptrace is an OpenTelemetry APM that supports distributed tracing, metrics, and logs. You can use it to monitor applications and troubleshoot issues.

Uptrace comes with an intuitive query builder, rich dashboards, alerting rules with notifications, and integrations for most languages and frameworks.

Uptrace can process billions of spans and metrics on a single server and allows you to monitor your applications at 10x lower cost.

In just a few minutes, you can try Uptrace by visiting the cloud demo (no login required) or running it locally with Docker. The source code is available on GitHub.

What's next?

Log4j is now integrated with OpenTelemetry, providing automatic trace correlation and export capabilities. For alternative logging frameworks in Java, explore Logback integration. For complete Java instrumentation, see the OpenTelemetry Java guide, Spring Boot integration, or Quarkus integration.