Golang Monitoring using OpenTelemetry

When it comes to monitoring Golang applications, there are various tools and practices you can use to gain insights into your application's performance, resource usage, and potential issues.

By using OpenTelemetry for monitoring in your Go applications, you can gain valuable insights into the behavior, performance, and resource utilization of your distributed systems, allowing you to troubleshoot issues, optimize performance, and improve the overall reliability of your software.

What is OpenTelemetry?

OpenTelemetry is an open source project that aims to provide a set of vendor-neutral, standardized APIs and instrumentation libraries for collecting distributed traces, metrics, and logs.

OpenTelemetry is designed to facilitate observability in cloud-native and microservices architectures, where applications are often composed of multiple services that communicate with each other.

OpenTelemetry provides a standardized way to capture observability signals:

- Metrics indicate that there is a problem.

- Traces tell you where the problem is.

- Logs help you find the root cause.

What is distributed tracing?

OpenTelemetry provides distributed tracing, allowing you to follow the flow of a single request as it propagates through different services in a distributed system. Traces provide a comprehensive view of the interactions between different components, helping you understand performance bottlenecks and troubleshoot problems.

Tracing works by instrumenting your application code so that each request produces a trace: a record of that request's path through the services and components of a distributed system.

Traces are composed of spans, which represent individual operations or stages within the trace. Each span captures timing and contextual information to help you understand the behavior and performance of your application.
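To make the trace and span vocabulary concrete, here is a toy model of a span built only on the standard library. It is not the OpenTelemetry API: the `Span` type, the `StartSpan` and `demo` functions, and the ID format are hypothetical. They only illustrate how a span records timing and attributes, and how a child span finds its parent through `context.Context`.

```go
package main

import (
	"context"
	"crypto/rand"
	"encoding/hex"
	"fmt"
	"time"
)

// Span is a hypothetical, simplified span: one timed operation within a trace.
type Span struct {
	TraceID    string // shared by every span in the same trace
	SpanID     string // unique to this span
	ParentID   string // empty for the root span
	Name       string
	Start      time.Time
	End        time.Time
	Attributes map[string]string // contextual information
}

// spanKey is the context key under which the active span is stored.
type spanKey struct{}

// newID returns n random bytes encoded as a hex string.
func newID(n int) string {
	b := make([]byte, n)
	if _, err := rand.Read(b); err != nil {
		panic(err)
	}
	return hex.EncodeToString(b)
}

// StartSpan creates a span. If ctx already carries a span, the new span joins
// its trace and records it as the parent; otherwise it starts a new trace.
func StartSpan(ctx context.Context, name string) (context.Context, *Span) {
	s := &Span{SpanID: newID(8), Name: name, Start: time.Now(), Attributes: map[string]string{}}
	if parent, ok := ctx.Value(spanKey{}).(*Span); ok {
		s.TraceID, s.ParentID = parent.TraceID, parent.SpanID
	} else {
		s.TraceID = newID(16)
	}
	return context.WithValue(ctx, spanKey{}, s), s
}

// Finish records the end time of the span.
func (s *Span) Finish() { s.End = time.Now() }

// demo builds a two-span trace: a root span and one child.
func demo() (root, child *Span) {
	ctx, root := StartSpan(context.Background(), "handle-request")
	_, child = StartSpan(ctx, "query-database")
	child.Attributes["db.system"] = "postgresql"
	child.Finish()
	root.Finish()
	return root, child
}

func main() {
	root, child := demo()
	fmt.Println("same trace:", root.TraceID == child.TraceID)
	fmt.Println("child of root:", child.ParentID == root.SpanID)
	fmt.Println("child duration:", child.End.Sub(child.Start))
}
```

Storing the active span in the context is the design choice that matters here: any function that receives the context can start a child span without knowing who called it.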

Traces

OpenTelemetry Go provides an API for creating and managing spans in your Go applications.

In the following example, we use the tracer's Start method to create spans. Start returns the new span together with a context that carries it; passing that context to other functions lets the spans they create become children of the current one. Note that until an SDK is configured, the global tracer is a no-op, so the example runs but records nothing.

package main

import (
	"context"
	"time"

	"go.opentelemetry.io/otel"
)

func main() {
	// Start a new root span representing the entire request.
	tracer := otel.Tracer("my-tracer")
	ctx, span := tracer.Start(context.Background(), "my-root-span")
	defer span.End()

	// Simulate some work.
	doWork(ctx)
}

func doWork(ctx context.Context) {
	// Create a child span to represent a processing stage.
	tracer := otel.Tracer("my-tracer")
	ctx, span := tracer.Start(ctx, "my-child-span")
	defer span.End()

	// Simulate some work.
	time.Sleep(100 * time.Millisecond)
}

For production applications, OpenTelemetry provides ready-to-use instrumentation for popular Go web frameworks like Echo and Beego, as well as database libraries including GORM and database/sql. These instrumentations automatically create spans for HTTP requests and database queries, making it easy to get comprehensive tracing coverage.
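Conceptually, HTTP instrumentation of this kind is middleware: it wraps a handler, times each request, and records the outcome. The sketch below is hand-rolled with the standard library only. The names `requestRecord`, `instrument`, and `demo` are hypothetical, and real instrumentation creates OpenTelemetry spans rather than filling a struct.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"time"
)

// requestRecord is a hypothetical stand-in for the span that real
// instrumentation would create for each request.
type requestRecord struct {
	Name     string
	Status   int
	Duration time.Duration
}

// statusWriter remembers the status code written by the wrapped handler.
type statusWriter struct {
	http.ResponseWriter
	status int
}

func (w *statusWriter) WriteHeader(code int) {
	w.status = code
	w.ResponseWriter.WriteHeader(code)
}

// instrument wraps next and passes one record per request to report.
func instrument(next http.Handler, report func(requestRecord)) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		start := time.Now()
		// Default to 200, which is what net/http sends if the handler
		// never calls WriteHeader explicitly.
		sw := &statusWriter{ResponseWriter: w, status: http.StatusOK}
		next.ServeHTTP(sw, r)
		report(requestRecord{
			Name:     r.Method + " " + r.URL.Path,
			Status:   sw.status,
			Duration: time.Since(start),
		})
	})
}

// demo sends one in-memory request through the wrapped handler and
// returns what was recorded.
func demo() requestRecord {
	var got requestRecord
	hello := http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprintln(w, "hello")
	})
	h := instrument(hello, func(rec requestRecord) { got = rec })
	h.ServeHTTP(httptest.NewRecorder(), httptest.NewRequest(http.MethodGet, "/hello", nil))
	return got
}

func main() {
	rec := demo()
	fmt.Printf("%s -> %d in %s\n", rec.Name, rec.Status, rec.Duration)
}
```

Because the wrapper only depends on `http.Handler`, the application's own handlers stay unchanged, which is why ready-made instrumentation can be added with very little code.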

Tracing data is typically exported to an OpenTelemetry backend or storage system for analysis and visualization.

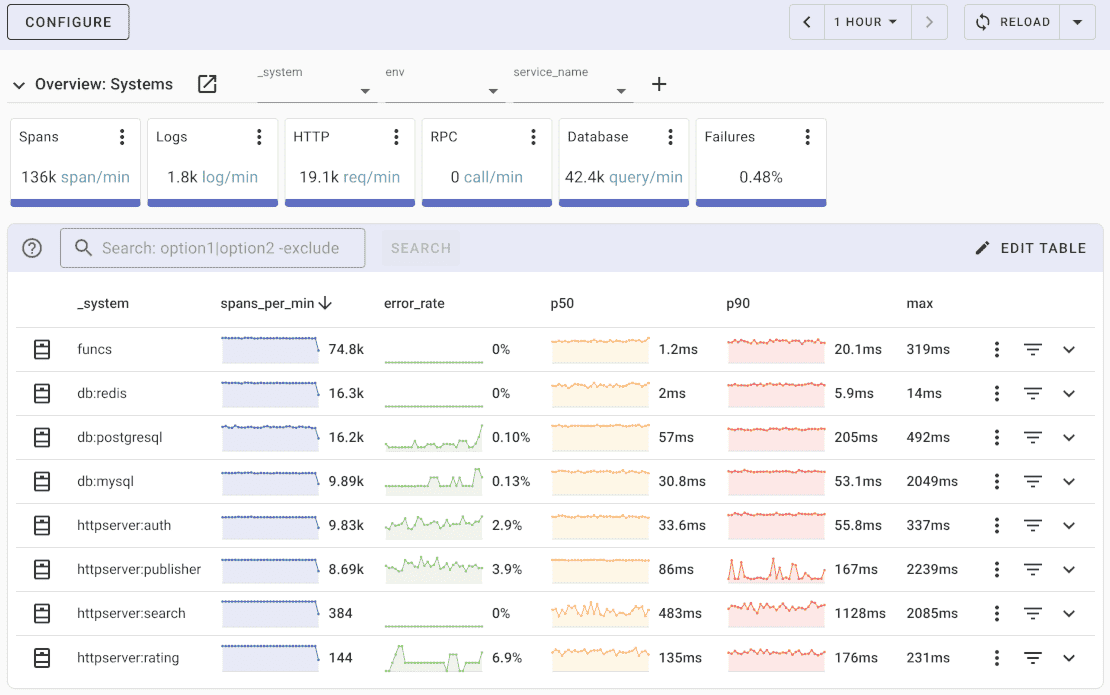

Uptrace is an observability tool that supports distributed tracing, metrics, and logs. You can use it to monitor applications and troubleshoot issues.

Uptrace comes with an intuitive query builder, rich dashboards, alerting rules with notifications, and integrations for most languages and frameworks.

Uptrace can process billions of spans on a single server, allowing you to monitor your software at 10x less cost.

Uptrace provides an OpenTelemetry distribution for Go that simplifies OpenTelemetry SDK configuration. Here is how you can use it to configure OpenTelemetry Go to export spans and metrics to Uptrace:

import "github.com/uptrace/uptrace-go/uptrace"

uptrace.ConfigureOpentelemetry(
	// copy your project DSN here or use UPTRACE_DSN env var
	//uptrace.WithDSN("<FIXME>"),

	uptrace.WithServiceName("myservice"),
	uptrace.WithServiceVersion("v1.0.0"),
	uptrace.WithDeploymentEnvironment("production"),
)

Metrics

OpenTelemetry Metrics provides a standardized way to collect and record metrics from your application. Metrics are essential for monitoring the health and performance of your services, helping you gain insight into your application's resource utilization and behavior.

Here's an example of how you can use OpenTelemetry metrics to create and record a counter in your Go application:

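package main

import (
	"context"
	"time"

	"go.opentelemetry.io/otel"
	"go.opentelemetry.io/otel/exporters/stdout/stdoutmetric"
	sdkmetric "go.opentelemetry.io/otel/sdk/metric"
)

func main() {
	ctx := context.Background()

	// Create a new stdout exporter to print metrics to the console.
	exporter, err := stdoutmetric.New(stdoutmetric.WithPrettyPrint())
	if err != nil {
		panic(err)
	}

	// Create a meter provider that exports through the stdout exporter
	// once per second, and register it globally.
	provider := sdkmetric.NewMeterProvider(
		sdkmetric.WithReader(sdkmetric.NewPeriodicReader(exporter,
			sdkmetric.WithInterval(time.Second))),
	)
	defer provider.Shutdown(ctx) // flushes any metrics not yet exported
	otel.SetMeterProvider(provider)

	// Create the counter instrument.
	meter := otel.Meter("example")
	requestsCounter, err := meter.Int64Counter("requests")
	if err != nil {
		panic(err)
	}

	// Increment the counter to simulate an event.
	requestsCounter.Add(ctx, 1)

	// Wait for a few seconds to see the metric output.
	time.Sleep(5 * time.Second)
}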
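Alongside application-level counters like the one above, resource utilization can be sampled from the Go runtime itself. The following is a minimal sketch using only the standard library; `runtimeSnapshot` and `takeSnapshot` are hypothetical names. In an OpenTelemetry setup, values like these would typically be reported through gauge-style instruments rather than printed.

```go
package main

import (
	"fmt"
	"runtime"
)

// runtimeSnapshot is a hypothetical container for a few runtime health values.
type runtimeSnapshot struct {
	Goroutines int    // number of goroutines that currently exist
	HeapAlloc  uint64 // bytes of allocated heap objects
	NumGC      uint32 // completed garbage collection cycles
}

// takeSnapshot reads the current values from the Go runtime.
func takeSnapshot() runtimeSnapshot {
	var m runtime.MemStats
	runtime.ReadMemStats(&m)
	return runtimeSnapshot{
		Goroutines: runtime.NumGoroutine(),
		HeapAlloc:  m.HeapAlloc,
		NumGC:      m.NumGC,
	}
}

func main() {
	s := takeSnapshot()
	fmt.Printf("goroutines=%d heap_alloc=%dB gc_cycles=%d\n", s.Goroutines, s.HeapAlloc, s.NumGC)
}
```

`runtime.ReadMemStats` briefly stops the world, so a sketch like this is better called on a timer of a few seconds than on every request.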

Logs

Monitoring logs in Go applications is crucial for identifying issues, understanding application behavior, and troubleshooting problems.

While traces and metrics provide valuable insights into system performance, logs offer detailed context and information about specific events, errors, and application behavior.

It is important to use a logging library that supports structured logging. Structured logging allows you to log key-value pairs or JSON objects instead of plain text messages. This makes it easier to parse and analyze logs programmatically.

Here's an example of what a structured log record looks like in JSON format:

{
  "timestamp": "2023-08-02T12:34:56.789Z",
  "severity": "INFO",
  "service": "example",
  "component": "web",
  "message": "User logged in",
  "user_id": "12345",
  "ip_address": "192.168.0.1"
}
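A similar record can be produced without third-party dependencies using the standard library's `log/slog` package (Go 1.21 or later). This is a minimal sketch: `logLogin` and `decodeLogin` are hypothetical helper names, and slog's default JSON keys are `time`, `level`, and `msg` rather than the `timestamp`, `severity`, and `message` keys shown above.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"io"
	"log/slog"
	"os"
)

// logLogin writes one structured record as a single JSON line to w.
func logLogin(w io.Writer) {
	// Attributes passed to With are attached to every record from this logger.
	logger := slog.New(slog.NewJSONHandler(w, nil)).With(
		slog.String("service", "example"),
		slog.String("component", "web"),
	)
	logger.Info("User logged in",
		slog.String("user_id", "12345"),
		slog.String("ip_address", "192.168.0.1"),
	)
}

// decodeLogin captures the record in memory and parses it back,
// showing that the output is machine-readable.
func decodeLogin() map[string]any {
	var buf bytes.Buffer
	logLogin(&buf)
	var rec map[string]any
	if err := json.Unmarshal(buf.Bytes(), &rec); err != nil {
		panic(err)
	}
	return rec
}

func main() {
	logLogin(os.Stdout)
	fmt.Println("parsed user_id:", decodeLogin()["user_id"])
}
```

Because every field is a key-value pair, a log pipeline can filter on `user_id` or `component` directly instead of parsing free-form text.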

Popular Go logging libraries that support structured logging are Logrus and Zap.

Logrus

Logrus is a popular logging library for Go that provides a simple and flexible API for logging in your applications. It is known for its ease of use and extensibility.

You can use the OpenTelemetry Logrus instrumentation (otellogrus) to record Logrus log entries as events on the active span:

import (
	"errors"

	"github.com/sirupsen/logrus"
	"github.com/uptrace/opentelemetry-go-extra/otellogrus"
)

// Instrument logrus.
logrus.AddHook(otellogrus.NewHook(otellogrus.WithLevels(
	logrus.PanicLevel,
	logrus.FatalLevel,
	logrus.ErrorLevel,
	logrus.WarnLevel,
)))

// Use ctx to pass the active span.
logrus.WithContext(ctx).
	WithError(errors.New("hello world")).
	WithField("foo", "bar").
	Error("something failed")

Zap

Zap is another popular logging library for Go, known for its high performance and low allocation overhead. Developed by Uber, Zap aims to provide fast and efficient logging for production use, which makes it a common choice for high-performance applications.

You can use the OpenTelemetry Zap instrumentation (otelzap) to record Zap log messages as events on the active span:

import (
	"errors"

	"github.com/uptrace/opentelemetry-go-extra/otelzap"
	"go.uber.org/zap"
)

// Wrap zap logger to extend Zap with API that accepts a context.Context.
log := otelzap.New(zap.NewExample())

// And then pass ctx to propagate the span.
log.Ctx(ctx).Error("hello from zap",
	zap.Error(errors.New("hello world")),
	zap.String("foo", "bar"))

// Alternatively.
log.ErrorContext(ctx, "hello from zap",
	zap.Error(errors.New("hello world")),
	zap.String("foo", "bar"))

Profiling

Profiling allows you to measure the performance and resource usage of your application. It helps you identify performance bottlenecks and areas that may need optimization.

Go provides built-in profiling tools that allow you to collect and analyze profiling data.

CPU profiling

CPU profiling is used to find out which functions use the most CPU time during the execution of your program. To enable CPU profiling in your Go application, you can use the runtime/pprof package:

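package main

import (
	"fmt"
	"os"
	"runtime/pprof"
)

func main() {
	f, err := os.Create("cpu.prof")
	if err != nil {
		fmt.Println("Error creating file:", err)
		return
	}
	defer f.Close()

	// StartCPUProfile returns an error if profiling is already enabled.
	if err := pprof.StartCPUProfile(f); err != nil {
		fmt.Println("Error starting CPU profile:", err)
		return
	}
	defer pprof.StopCPUProfile()

	// Your application code here...
}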

Run your program with profiling enabled and it generates a cpu.prof file. You can then analyze this file using the go tool pprof command-line tool (for example, go tool pprof cpu.prof).

Memory profiling

Memory profiling helps you understand memory usage patterns in your application and identify potential memory leaks. To enable memory profiling, you can again use the runtime/pprof package:

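package main

import (
	"fmt"
	"os"
	"runtime"
	"runtime/pprof"
)

func main() {
	// Your application code here...

	f, err := os.Create("memory.prof")
	if err != nil {
		fmt.Println("Error creating file:", err)
		return
	}
	defer f.Close()

	// Write the heap profile after the work it should describe. Running a
	// garbage collection first brings the allocation statistics up to date.
	runtime.GC()
	if err := pprof.WriteHeapProfile(f); err != nil {
		fmt.Println("Error writing heap profile:", err)
	}
}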

Running your program with memory profiling enabled generates a memory.prof file. Analyze this file using the go tool pprof command-line tool.

Block profiling

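Block profiling shows where goroutines block while waiting on synchronization primitives such as channels and mutexes. This helps identify potential concurrency issues. Block profiling is off by default: enable it with runtime.SetBlockProfileRate, then write the profile with the runtime/pprof package once the code of interest has run:

package main

import (
	"fmt"
	"os"
	"runtime"
	"runtime/pprof"
)

func main() {
	// Record every blocking event; the block profile is disabled by default.
	runtime.SetBlockProfileRate(1)

	// Your application code here...

	f, err := os.Create("block.prof")
	if err != nil {
		fmt.Println("Error creating file:", err)
		return
	}
	defer f.Close()

	// Write the profile after the application code has run.
	if err := pprof.Lookup("block").WriteTo(f, 0); err != nil {
		fmt.Println("Error writing block profile:", err)
	}
}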

Running your program with block profiling enabled generates a block.prof file. Analyze this file using the go tool pprof command-line tool.
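The profiles above are written to files when the program runs. Long-running services often expose the same data over HTTP instead, using the standard library's `net/http/pprof` package. The sketch below registers the pprof handlers on a dedicated mux; `newDebugMux` and `indexStatus` are hypothetical helper names, and the `localhost:6060` address in the comment is only an example.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"net/http/pprof"
)

// newDebugMux returns a mux that serves the standard pprof endpoints.
func newDebugMux() *http.ServeMux {
	mux := http.NewServeMux()
	mux.HandleFunc("/debug/pprof/", pprof.Index) // also serves heap, block, goroutine, ...
	mux.HandleFunc("/debug/pprof/cmdline", pprof.Cmdline)
	mux.HandleFunc("/debug/pprof/profile", pprof.Profile) // CPU profile
	mux.HandleFunc("/debug/pprof/symbol", pprof.Symbol)
	mux.HandleFunc("/debug/pprof/trace", pprof.Trace)
	return mux
}

// indexStatus requests the pprof index page in memory and returns the HTTP status.
func indexStatus(mux *http.ServeMux) int {
	rec := httptest.NewRecorder()
	mux.ServeHTTP(rec, httptest.NewRequest(http.MethodGet, "/debug/pprof/", nil))
	return rec.Code
}

func main() {
	mux := newDebugMux()
	// In a real service, serve the mux on a private address instead, e.g.:
	//   go http.ListenAndServe("localhost:6060", mux)
	fmt.Println("pprof index status:", indexStatus(mux))
}
```

Using a separate mux keeps the profiling endpoints off the public listener, which matters because profiles can reveal internal details of the application.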

Conclusion

OpenTelemetry Go is a valuable tool for Go developers who want to implement observability best practices in their applications.

With OpenTelemetry Go, developers can collect telemetry data, understand the behavior of their application, and ensure optimal performance and reliability in modern cloud-native and microservices environments.
