OpenTelemetry Python distro for Uptrace

This document explains how to configure the OpenTelemetry Python SDK to export spans (traces), logs, and metrics to Uptrace using OTLP/HTTP.

Choose Your Setup Path

Option A: Quick Start with uptrace-python

Best for: Getting started quickly, automatic configuration

uptrace-python is a thin wrapper over opentelemetry-python that configures the OpenTelemetry SDK to export data to Uptrace. It does not add any new functionality and is provided only for your convenience.

Option B: Direct OTLP Configuration

Best for: Existing OpenTelemetry users, custom exporters, fine-grained control
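For readers taking this path, the following is a minimal sketch of how the standard OTLP exporter environment variables could be derived from an Uptrace DSN (the DSN is introduced in Step 1 of the Quick Start). The helper name otlp_env_from_dsn is hypothetical. The endpoint choice and the uptrace-dsn header name are assumptions, so verify them against your project settings before relying on them.

```python
from typing import Dict
from urllib.parse import urlsplit


def otlp_env_from_dsn(dsn: str) -> Dict[str, str]:
    """Derive OTLP exporter environment variables from an Uptrace DSN.

    Hypothetical helper for illustration; not part of uptrace-python.
    """
    parts = urlsplit(dsn)
    if not parts.scheme or not parts.hostname:
        # Do not echo the DSN here: it contains the project secret.
        raise ValueError("DSN must be a URL such as https://<secret>@<host>")

    # Assumption: OTLP/HTTP is served by the host named in the DSN.
    endpoint = f"{parts.scheme}://{parts.hostname}"
    if parts.port:
        endpoint += f":{parts.port}"

    return {
        "OTEL_EXPORTER_OTLP_ENDPOINT": endpoint,
        "OTEL_EXPORTER_OTLP_PROTOCOL": "http/protobuf",
        # Assumption: Uptrace reads the DSN from an `uptrace-dsn` header.
        "OTEL_EXPORTER_OTLP_HEADERS": f"uptrace-dsn={dsn}",
    }


if __name__ == "__main__":
    for key, value in otlp_env_from_dsn("https://secret@api.uptrace.dev?grpc=4317").items():
        print(f"{key}={value}")
```

Exporting these variables before starting the application lets an existing OpenTelemetry setup pick up the endpoint and headers without code changes.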

Quick Start Guide

Follow these steps to get your first trace running in 5 minutes:

Step 1: Create an Uptrace Project

Create an Uptrace project to obtain a DSN (Data Source Name), for example, https://<secret>@api.uptrace.dev?grpc=4317.
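A DSN is an ordinary URL, so it can be inspected with the standard library. The sketch below splits the example DSN into its parts and produces a masked form that is safe to log. ParsedDSN and parse_dsn are hypothetical names used for illustration only; they are not part of uptrace-python.

```python
from dataclasses import dataclass
from typing import Optional
from urllib.parse import parse_qs, urlsplit


@dataclass(frozen=True)
class ParsedDSN:
    scheme: str
    secret: str
    host: str  # host, plus ":port" when the DSN includes one
    grpc_port: Optional[int]

    def masked(self) -> str:
        """Return the DSN with the secret hidden, suitable for logs."""
        return f"{self.scheme}://***@{self.host}"


def parse_dsn(dsn: str) -> ParsedDSN:
    parts = urlsplit(dsn)
    if not parts.scheme or not parts.hostname or not parts.username:
        raise ValueError("DSN must look like https://<secret>@<host>?grpc=<port>")

    # Everything after "@" in the network location is the host (and port).
    host = parts.netloc.rpartition("@")[2]

    # The grpc query parameter is optional in this sketch.
    grpc_values = parse_qs(parts.query).get("grpc")
    grpc_port = int(grpc_values[0]) if grpc_values else None

    return ParsedDSN(parts.scheme, parts.username, host, grpc_port)


if __name__ == "__main__":
    print(parse_dsn("https://s3cret@api.uptrace.dev?grpc=4317").masked())
```

Logging the masked form instead of the raw DSN keeps the project secret out of application logs.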

Step 2: Install uptrace-python

pip install uptrace

Step 3: Basic Configuration

Configure the Uptrace client with the DSN from your project settings page. Replace <FIXME> with your actual Uptrace DSN, and myservice with a name that identifies your application.

import uptrace
from opentelemetry import trace

uptrace.configure_opentelemetry(
    # copy your project DSN here or use UPTRACE_DSN env var
    # dsn="<FIXME>",
    service_name="myservice",
    service_version="1.0.0",
    deployment_environment="production",
)
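The configuration above leaves dsn commented out and relies on the UPTRACE_DSN environment variable. If you prefer to make that choice explicit and fail fast when neither source is set, something like the following could work. resolve_dsn is a hypothetical helper, and the precedence it applies (argument first, then environment) is an assumption of this sketch, not documented library behavior.

```python
import os
from typing import Mapping, Optional


def resolve_dsn(
    explicit: Optional[str] = None,
    env: Mapping[str, str] = os.environ,
) -> str:
    """Pick the DSN from an explicit value or the UPTRACE_DSN variable."""
    # Assumption: an explicitly passed DSN takes precedence over the environment.
    dsn = explicit or env.get("UPTRACE_DSN", "")

    # Treat the documentation placeholder as "not configured".
    if not dsn or dsn == "<FIXME>":
        raise RuntimeError("Uptrace DSN is not set: pass dsn=... or export UPTRACE_DSN")
    return dsn
```

The result can then be passed as dsn=resolve_dsn() to uptrace.configure_opentelemetry, so a missing DSN surfaces at startup rather than as silently missing telemetry.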

Step 4: Create Your First Trace

Copy the code to main.py:

#!/usr/bin/env python3

import uptrace
from opentelemetry import trace
from opentelemetry.trace import Status, StatusCode

# Configure OpenTelemetry with sensible defaults.
uptrace.configure_opentelemetry(
    # copy your project DSN here or use UPTRACE_DSN env var
    # dsn="<FIXME>",
    service_name="myservice",
    service_version="1.0.0",
)

# Create a tracer. Usually, tracer is a global variable.
tracer = trace.get_tracer("app_or_package_name", "1.0.0")

# Create a root span (a trace) to measure some operation.
with tracer.start_as_current_span("main-operation") as main:
    # The context manager handles ending the span automatically.

    # Create a child span for an HTTP operation.
    with tracer.start_as_current_span("GET /posts/:id") as child1:
        child1.set_attributes({
            "http.method": "GET",
            "http.route": "/posts/:id",
            "http.url": "http://localhost:8080/posts/123",
            "http.status_code": 200,
        })

        # Record an error
        try:
            raise ValueError("dummy error")
        except Exception as exc:
            child1.record_exception(exc)
            child1.set_status(Status(StatusCode.ERROR, str(exc)))

    # Create a child span for a database operation.
    with tracer.start_as_current_span("SELECT") as child2:
        child2.set_attributes({
            "db.system": "mysql",
            "db.statement": "SELECT * FROM posts LIMIT 100",
        })

    print(f"trace: {uptrace.trace_url(main)}")

# Send buffered spans and free resources.
uptrace.shutdown()

Step 5: Run Your Application

Run the code, replacing <FIXME> with your Uptrace DSN:

$ UPTRACE_DSN="<FIXME>" python main.py

trace: https://app.uptrace.dev/traces/<trace_id>
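To make the shape of the printed link concrete, here is a small sketch of how such a URL could be assembled. It assumes that the <trace_id> placeholder is the 32-character lowercase hex form of OpenTelemetry's 128-bit trace ID. build_trace_url is a hypothetical function for illustration; application code should keep calling uptrace.trace_url.

```python
def format_trace_id(trace_id: int) -> str:
    """Render a 128-bit trace ID as 32 lowercase hex characters, zero-padded."""
    return format(trace_id, "032x")


def build_trace_url(trace_id: int, base_url: str = "https://app.uptrace.dev") -> str:
    # Assumption: the link has the form <base_url>/traces/<hex trace id>.
    return f"{base_url}/traces/{format_trace_id(trace_id)}"


if __name__ == "__main__":
    print(build_trace_url(0x5B8EFFF798038103D269B633813FC60C))
```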

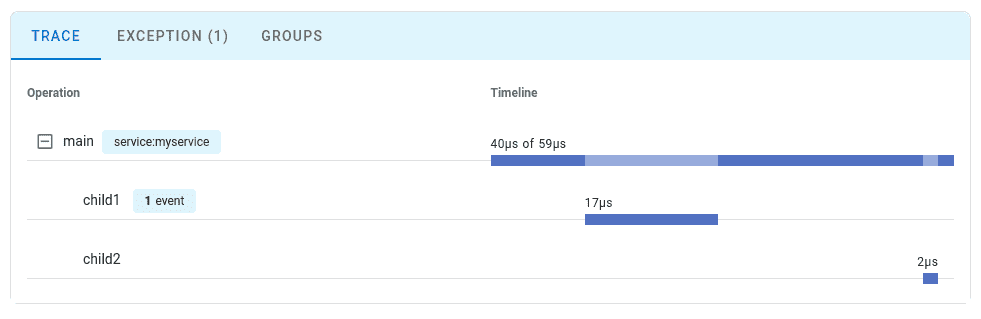

Step 6: View Your Trace

Follow the link to view the trace:

Configuration Options

You can find the full list of available options at PyPI.

| Option | Description |
|---|---|
| dsn | A data source that specifies Uptrace project credentials. For example, https://<secret>@api.uptrace.dev?grpc=4317. |
| service_name | service.name resource attribute. For example, myservice. |
| service_version | service.version resource attribute. For example, 1.0.0. |
| deployment_environment | deployment.environment resource attribute. For example, production. |
| resource_attributes | Any other resource attributes. |
| resource | Resource attributes representing an entity that produces telemetry. |
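The table maps three of the options onto well-known resource attribute keys. The sketch below illustrates that mapping with plain dictionaries. build_resource_attributes is a hypothetical helper, and the rule that the dedicated options override free-form keys is an assumption of this sketch rather than documented behavior.

```python
from typing import Dict, Mapping, Optional


def build_resource_attributes(
    service_name: str = "",
    service_version: str = "",
    deployment_environment: str = "",
    resource_attributes: Optional[Mapping[str, str]] = None,
) -> Dict[str, str]:
    """Merge the dedicated options with free-form resource attributes."""
    # Start from the free-form attributes.
    attrs = dict(resource_attributes or {})

    # Assumption: dedicated options win over free-form keys of the same name.
    if service_name:
        attrs["service.name"] = service_name
    if service_version:
        attrs["service.version"] = service_version
    if deployment_environment:
        attrs["deployment.environment"] = deployment_environment
    return attrs


if __name__ == "__main__":
    print(build_resource_attributes("myservice", "1.0.0", "production", {"host.name": "web-1"}))
```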

Application Servers

The BatchSpanProcessor spawns a background thread to export spans. This doesn't work well with application servers like Gunicorn and uWSGI that use a pre-forking model. During the fork, the child process inherits the parent's locks in whatever state they were in, but not the thread that would release them, which can cause deadlocks.
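Part of the problem is that fork() copies only the calling thread. The following POSIX-only sketch uses the standard library alone, not the OpenTelemetry SDK, to show that a background thread started in the parent is absent in the forked child. The thread name exporter is chosen purely for illustration.

```python
import os
import threading
from typing import List, Tuple


def background_worker(stop: threading.Event) -> None:
    # Stand-in for an export loop: wake up periodically until asked to stop.
    while not stop.wait(0.05):
        pass


def thread_names_after_fork() -> Tuple[List[str], List[str]]:
    """Return (thread names in the parent, thread names in the forked child)."""
    stop = threading.Event()
    worker = threading.Thread(
        target=background_worker, args=(stop,), name="exporter", daemon=True
    )
    worker.start()

    read_fd, write_fd = os.pipe()
    pid = os.fork()
    if pid == 0:
        # Child process: report which threads exist here, then exit immediately.
        try:
            os.close(read_fd)
            names = ",".join(t.name for t in threading.enumerate())
            os.write(write_fd, names.encode())
            os.close(write_fd)
        finally:
            os._exit(0)

    # Parent process: read the child's report and reap the child.
    os.close(write_fd)
    with os.fdopen(read_fd) as reader:
        child_names = reader.read().split(",")
    os.waitpid(pid, 0)

    parent_names = [t.name for t in threading.enumerate()]
    stop.set()
    worker.join()
    return parent_names, child_names


if __name__ == "__main__":
    parent, child = thread_names_after_fork()
    print("parent threads:", parent)
    print("child threads:", child)
```

Recent Python versions may emit a DeprecationWarning when fork() is called from a multi-threaded process, which is the same hazard this section describes.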

To resolve this issue, configure OpenTelemetry using post-fork hooks:

Gunicorn

Use the post_fork hook:

# gunicorn.conf.py

import uptrace

def post_fork(server, worker):
    uptrace.configure_opentelemetry(
        service_name="myservice",
        service_version="1.0.0",
    )
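A possible companion to the post_fork hook, sketched under the assumption that you also want buffered spans flushed when a worker stops: Gunicorn's worker_exit hook runs in the worker process, and uptrace.shutdown() is the same call used at the end of main.py. Importing uptrace inside the hooks is a choice made for this sketch so that the module is loaded only where it is used.

```python
# gunicorn.conf.py (sketch)


def post_fork(server, worker):
    # Runs in each worker right after it has been forked.
    import uptrace

    uptrace.configure_opentelemetry(
        service_name="myservice",
        service_version="1.0.0",
    )


def worker_exit(server, worker):
    # Runs in the worker process just after the worker has exited its loop.
    import uptrace

    # Send buffered spans and free resources before the process goes away.
    uptrace.shutdown()
```

Whether the extra flush is needed depends on how workers are stopped in your deployment, so treat this as a starting point rather than a required setting.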

uWSGI

Use the postfork decorator:

from uwsgidecorators import postfork
import uptrace

@postfork
def init_tracing():
    uptrace.configure_opentelemetry(
        service_name="myservice",
        service_version="1.0.0",
    )

What's Next?

Instrument more operations to get a detailed picture of your application. Prioritize network calls, database queries, errors, and logs.

By Use Case

| I want to... | Read this |
|---|---|
| Instrument without code changes | Zero-code instrumentation |
| Instrument my code with spans | Tracing API |
| Collect application metrics | Metrics API |
| Send logs to Uptrace | Logs integration |
| Enable distributed tracing | Context propagation |
| Reduce costs in production | Sampling strategies |
| Auto-detect cloud environment | Resource detectors |